When you dive into the world of computers, one concept that often comes up is cache memory. It’s like the brain’s quick-access notes, helping your device run smoother and faster. But what exactly is it, and why should you care? In this article, we’ll break it down step by step, exploring its inner workings, types, benefits, and even how it’s evolving in 2025. Whether you’re a tech enthusiast, a student, or just curious about how your gadgets work, understanding cache memory can give you a real edge in appreciating modern computing.

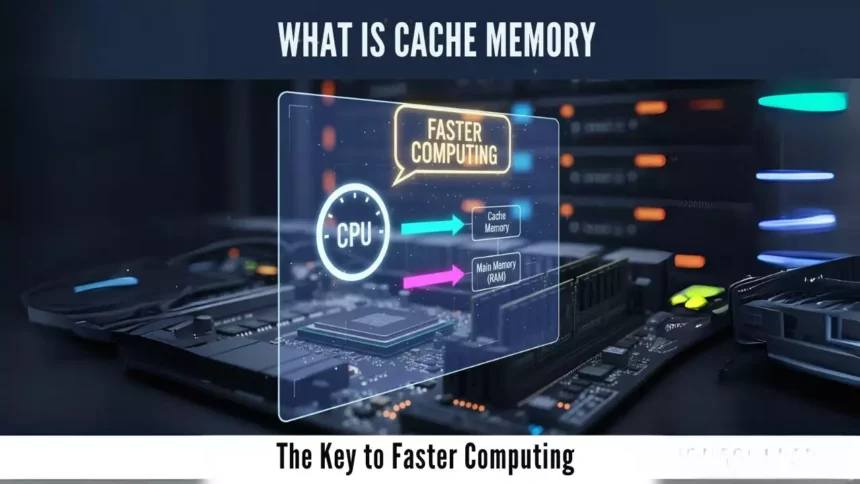

Let’s start with the basics. Cache memory is a small, high-speed storage component that acts as a buffer between the CPU and main memory (RAM). It stores copies of frequently accessed data and instructions, so the processor doesn’t have to fetch them from slower parts of the system every time. Imagine you’re reading a book and keep flipping back to the same page; cache memory is like having that page bookmarked for instant access. This setup significantly reduces latency and boosts overall performance, which is why caches appear in everything from smartphones to supercomputers.

To put it in perspective, without cache memory, your computer would constantly trudge through the slower main memory, leading to frustrating delays. Caching is a clever optimization that rests on the principle of locality of reference: programs tend to reuse the same data within a short period (temporal locality) and to access data stored near what they just used (spatial locality). By keeping this data close to the processor, cache memory lets most requests complete in a few clock cycles instead of hundreds. As we explore this topic, we’ll draw on established computer science knowledge, including sources like Wikipedia and recent tech discussions, to paint a complete picture.

The Evolution and Basics of Cache Memory

Cache memory didn’t just appear overnight; it’s a product of decades of innovation in computing. The concept dates back to the 1960s, but it became mainstream with the advent of microprocessors in the 1980s. At its core, cache memory is designed to bridge the gap between the ultra-fast CPU and the relatively sluggish DRAM (Dynamic Random Access Memory). That gap is large: a modern CPU core completes a clock cycle in well under a nanosecond, while a trip to main memory typically takes roughly 50 to 100 nanoseconds, long enough for hundreds of cycles to pass.

So, how does it work? When the CPU needs data, it first checks the cache. If the data is there (a cache hit), it’s retrieved almost instantly. If not (a cache miss), the CPU has to fetch it from main memory, which is much slower. When the cache is full, a replacement policy such as least recently used (LRU) or first in, first out (FIFO) decides which existing entry to evict to make room. The goal is efficiency: keeping the data most likely to be reused close at hand.
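The hit/miss bookkeeping and the two replacement policies can be simulated in a few lines of Python. This is a toy sketch, assuming a fully associative cache that stores whole items; real CPU caches track fixed-size lines, and hardware usually implements approximations of LRU rather than exact LRU. The function names are illustrative.

```python
from collections import OrderedDict, deque

# Toy, fully associative model; function names are illustrative.


def simulate_lru(trace, capacity):
    """Return (hits, misses) for an LRU cache holding `capacity` items."""
    cache = OrderedDict()
    hits = misses = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # now the most recently used
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)   # evict the least recently used
            cache[key] = True
    return hits, misses


def simulate_fifo(trace, capacity):
    """Return (hits, misses) for a FIFO cache: evict the oldest arrival."""
    cache = set()
    arrivals = deque()                      # insertion order, oldest first
    hits = misses = 0
    for key in trace:
        if key in cache:
            hits += 1                       # hits do not change FIFO order
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.remove(arrivals.popleft())
            cache.add(key)
            arrivals.append(key)
    return hits, misses


if __name__ == "__main__":
    trace = ["A", "B", "A", "C", "A", "D", "A", "B"]
    print("LRU :", simulate_lru(trace, capacity=3))
    print("FIFO:", simulate_fifo(trace, capacity=3))
```

On this trace, LRU scores 3 hits and FIFO scores 2: FIFO evicts the heavily reused item A simply because it arrived first, while LRU keeps it because it was used recently.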

In 2025, we’re seeing advancements that make cache memory even more sophisticated. Hardware prefetchers already anticipate data needs by spotting access patterns and loading data before it is requested, and with the rise of AI and machine learning, researchers are exploring learned models that predict those patterns more accurately. In edge computing devices, neural processing units typically pair their compute units with dedicated on-chip memory so they can process data in real time without waiting on main memory. This evolution isn’t just about speed; it’s about making systems more responsive to how they are actually used.
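The idea of anticipating data needs from access patterns is easiest to see in a hardware prefetcher. Below is a toy stride prefetcher, a sketch under stated assumptions rather than any real CPU’s design: it assumes a single stream of byte addresses, 64-byte lines, and an unbounded cache that never evicts. The function name and the rule "once the same stride repeats, fetch one stride ahead" are illustrative choices.

```python
def simulate_prefetch(addresses, line_size=64, prefetch=True):
    """Return (hits, misses) for an access stream, optionally with a
    toy stride prefetcher. Assumes an unbounded cache (no evictions).
    """
    fetched = set()            # lines already brought in (demand or prefetch)
    hits = misses = 0
    last_addr = None
    last_stride = None
    for addr in addresses:
        line = addr // line_size
        if line in fetched:
            hits += 1
        else:
            misses += 1
            fetched.add(line)
        if prefetch and last_addr is not None:
            stride = addr - last_addr
            if stride != 0 and stride == last_stride:
                # Same stride twice in a row: fetch the next expected line early.
                fetched.add((addr + stride) // line_size)
            last_stride = stride
        last_addr = addr
    return hits, misses


if __name__ == "__main__":
    trace = [i * 64 for i in range(10)]   # walking an array one line at a time
    print("with prefetch:   ", simulate_prefetch(trace))
    print("without prefetch:", simulate_prefetch(trace, prefetch=False))
```

For a steady walk through memory, the prefetcher misses only on the first three accesses (while it learns the stride) and covers the remaining seven; without it, all ten accesses miss. An irregular stream gives it nothing to learn, which is why prefetching helps most with predictable access patterns.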

How Cache Memory Works in Detail

Diving deeper, let’s unpack the mechanics of cache memory. It’s essentially a hierarchy of storage levels, each serving a specific purpose. The process begins with the CPU issuing a request for data. If that data is in the cache, it’s a hit, and the operation proceeds swiftly. But if it’s a miss, the system triggers a fetch from lower levels, which could involve the memory controller accessing RAM.
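The cost of going to lower levels can be made concrete with the standard average memory access time (AMAT) formula, applied level by level: AMAT = hit time + miss rate × miss penalty, where each level’s miss penalty is the AMAT of everything below it. The latencies and miss rates in this sketch are assumed round numbers for illustration, not measurements of any real chip.

```python
def amat(levels, memory_latency):
    """Average memory access time for a multi-level cache hierarchy.

    `levels` is a list of (hit_time, local_miss_rate) pairs ordered from L1
    outward. Working from the bottom up:
        AMAT_i = hit_time_i + miss_rate_i * AMAT_(i+1)
    with main memory's latency as the innermost term.
    """
    time = memory_latency
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time


if __name__ == "__main__":
    # Illustrative numbers in CPU cycles (assumptions, not benchmarks).
    hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]   # L1, L2, L3
    print(f"with caches:    {amat(hierarchy, 300):.1f} cycles")
    print(f"without caches: {amat([], 300):.1f} cycles")
```

With these assumed numbers, the three cache levels bring the average access down from 300 cycles to 12.8, even though half of the requests that reach L3 still go all the way to memory.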

One key aspect is the use of SRAM (Static Random Access Memory) for cache. Unlike DRAM, which must be refreshed constantly to retain data, SRAM holds its contents as long as power is applied and is much faster to access. The trade-off is cost: an SRAM cell typically uses six transistors per bit, versus one transistor and one capacitor for DRAM, so caches are kept small, ranging from tens of kilobytes per core at L1 to tens of megabytes at L3. For example, a modern desktop CPU from Intel or AMD typically has tens of megabytes of L3 cache (64MB on some high-end parts), acting as a shared pool for multiple cores.

Cache memory also stores data in fixed-size blocks called cache lines, commonly 64 bytes on modern CPUs. This leverages spatial locality: if the CPU accesses one piece of data, nearby data is likely needed soon, and it arrives as part of the same line. In practice, cache controllers handle mapping (deciding which cache locations an address may occupy), replacement policies, and coherence in multi-core systems. Looking further ahead, researchers are exploring hybrid caches that combine traditional SRAM with emerging technologies such as optical storage, though such designs remain experimental.
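Mapping works by slicing each address into fields. The sketch below splits a byte address into tag, set index, and byte offset. Its defaults are assumptions chosen to match a common L1 data cache geometry: 64-byte lines and 64 sets, which with 8 ways gives 64 × 8 × 64 bytes = 32KB. Associativity does not change how the address is split, so the function does not need it. The function name is illustrative.

```python
def split_address(addr, line_size=64, num_sets=64):
    """Split a byte address into (tag, set_index, offset).

    The low log2(line_size) bits select the byte within the line, the next
    log2(num_sets) bits select the set, and the remaining high bits form the
    tag that is compared against the tags stored in that set.
    """
    if line_size & (line_size - 1) or num_sets & (num_sets - 1):
        raise ValueError("line_size and num_sets must be powers of two")
    offset_bits = line_size.bit_length() - 1
    index_bits = num_sets.bit_length() - 1
    offset = addr & (line_size - 1)
    set_index = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, set_index, offset


if __name__ == "__main__":
    for addr in (0x1000, 0x1008, 0x1040):
        tag, index, offset = split_address(addr)
        print(f"{addr:#06x}: tag={tag} set={index} offset={offset}")
```

Addresses 0x1000 and 0x1008 share a tag and set, so they live in the same line: once the first is loaded, the second is a spatial-locality hit. Address 0x1040 starts the next line and maps to the next set.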

Real-world example: Think about web browsing. When you visit a website, your browser stores elements like images and scripts in its cache. The next time you load the page, it pulls from this local cache instead of downloading everything again, speeding up the process. This is a software-based cache, contrasting with the hardware cache in CPUs, but both serve the same fundamental purpose.

Types of Cache Memory

Cache memory isn’t one-size-fits-all; it comes in various forms, each tailored to specific needs. The primary distinction is between hardware and software caches. Hardware caches are built into the CPU or other chips, providing the fastest access. Software caches, on the other hand, are managed by operating systems or applications, like the in-memory cache used in web servers.

Within hardware caches, there are several subtypes. An instruction cache stores the code the CPU is executing, while a data cache holds variables and other data. A unified cache holds both; modern processors typically split L1 into separate instruction and data caches and use unified caches at L2 and L3. In software, you might encounter a disk cache, which uses RAM to buffer data from hard drives and reduce read times.

Another way to categorize caches is by persistence. Volatile caches lose their data when power is cut, which is typical for CPU caches. Non-volatile caches, such as the flash-based caches in some SSDs and hybrid drives, retain data, adding reliability for critical applications. As we look to 2025, fast NVMe (Non-Volatile Memory Express) SSDs, which use a protocol designed for flash storage attached over PCIe, are increasingly used as cache tiers in front of slower storage, blurring these lines by offering both speed and durability.

To illustrate, let’s compare the main types in a table:

| Type of Cache | Primary Use Case | Speed (Relative) | Storage Medium | Typical Size |
|---|---|---|---|---|
| L1 Cache | On-chip, per-core storage for immediate access | Extremely fast | SRAM | 16KB – 128KB per core |
| L2 Cache | Secondary level for frequent data | Very fast | SRAM | 256KB – 8MB |
| L3 Cache | Shared among cores | Fast | SRAM (eDRAM in some designs) | 4MB – 64MB |
| Software Cache | Application-level buffering | Variable | RAM or disk | Varies widely |

This table highlights how different caches balance speed, size, and cost, helping you understand their roles in a system.

Levels of Cache and Their Hierarchy

The cache hierarchy is a structured approach to memory management, typically divided into levels: L1, L2, and L3. L1 cache is the smallest and fastest, often split into instruction and data sections, and it’s embedded directly in the CPU core. L2 cache is larger and slightly slower, catching requests that miss in L1, while L3 cache is shared across multiple cores in a processor, providing a larger pool for data sharing.

This tiered structure minimizes average access time. Data moves from main memory to L3, then L2, and finally L1 as it’s needed. In multi-core processors, cache coherence protocols keep every core’s view of shared data consistent, so one core never keeps working with a stale copy after another core has changed it. This is crucial for parallel processing tasks.
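Widely used coherence protocols belong to the MESI family. A minimal sketch of the simpler three-state MSI protocol for a single cache line shows the core rule: before a core writes, every other copy is invalidated, and a dirty copy is written back before anyone else reads it. This toy model assumes a snooping bus where every core sees every request, tracks states only (no data), and uses illustrative class and method names.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"   # only valid copy, and it is dirty (newer than memory)
    SHARED = "S"     # clean copy; other cores may hold one too
    INVALID = "I"    # no valid copy in this core's cache


class MSILine:
    """Track one cache line's MSI state in each core's private cache."""

    def __init__(self, num_cores):
        self.states = [State.INVALID] * num_cores
        self.writebacks = 0   # times a dirty copy was flushed to memory

    def _flush_modified_except(self, core):
        # A dirty owner writes the line back and keeps a clean shared copy.
        for other, state in enumerate(self.states):
            if other != core and state is State.MODIFIED:
                self.writebacks += 1
                self.states[other] = State.SHARED

    def read(self, core):
        if self.states[core] is State.INVALID:
            # Read miss: other caches snoop the request; a dirty owner flushes.
            self._flush_modified_except(core)
            self.states[core] = State.SHARED
        # Reads in S or M are local hits with no bus traffic.

    def write(self, core):
        if self.states[core] is not State.MODIFIED:
            # Gain exclusive ownership: flush a dirty owner, invalidate the rest.
            self._flush_modified_except(core)
            for other in range(len(self.states)):
                if other != core:
                    self.states[other] = State.INVALID
            self.states[core] = State.MODIFIED

    def snapshot(self):
        return "".join(s.value for s in self.states)


if __name__ == "__main__":
    line = MSILine(num_cores=2)
    for op, core in [("read", 0), ("read", 1), ("write", 0), ("read", 1)]:
        getattr(line, op)(core)
        print(f"core {core} {op:5}: states={line.snapshot()}")
```

After both cores read, each holds a shared copy; core 0’s write invalidates core 1’s copy; core 1’s next read forces core 0 to write the dirty line back, and both end up sharing it again.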

By 2025, with the growth of heterogeneous computing, we’re seeing more complex hierarchies. For instance, in systems with integrated GPUs, the CPU and GPU often share a unified pool of main memory, and in some designs a last-level cache as well, which speeds up AI workloads by avoiding copies between separate memory pools. This evolution is driven by the need for efficiency in data-intensive applications like virtual reality and autonomous vehicles.

Advantages and Disadvantages of Cache Memory

Like any technology, cache memory has its pros and cons. On the positive side, it dramatically improves system performance by reducing the time spent waiting for data. This leads to faster boot times, quicker application launches, and better multitasking. In energy-efficient devices, it also helps conserve power: an on-chip cache access costs far less energy than a trip to off-chip DRAM, and a CPU that stalls less finishes its work sooner and can drop into a low-power state earlier.

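However, there are drawbacks. Cache memory is expensive to implement, which limits its size and increases hardware costs. Additionally, when a program’s working set is larger than the cache, or several addresses keep competing for the same cache locations, the result can be cache thrashing: data is evicted just before it is needed again, so misses pile up and performance degrades. Security is another concern; attacks like Spectre and Meltdown abuse speculative execution and use cache timing as a side channel to leak data, prompting ongoing mitigations in hardware and software.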
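Thrashing is easy to reproduce in a simulation. When a loop cycles through a working set just one item larger than an LRU cache, each new item evicts exactly the item that will be needed soonest, so every access misses. This toy sketch assumes a fully associative LRU cache with a capacity of 4 items; the function name is illustrative.

```python
from collections import OrderedDict


def lru_hits(trace, capacity):
    """Return how many accesses in `trace` hit an LRU cache of `capacity` items."""
    cache = OrderedDict()
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)   # evict the least recently used
            cache[key] = True
    return hits


if __name__ == "__main__":
    capacity = 4
    fits = list(range(4)) * 10       # working set of 4 items: fits in the cache
    thrashes = list(range(5)) * 10   # working set of 5 items: one too many
    print("fits:    ", lru_hits(fits, capacity), "hits of", len(fits))
    print("thrashes:", lru_hits(thrashes, capacity), "hits of", len(thrashes))
```

The fitting loop misses only on its first pass (36 hits out of 40), while the loop that is one item too large never hits at all (0 out of 50). Growing the working set by a single item turned a nearly perfect cache into a useless one.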

In real-world applications, these advantages shine in performance-intensive workloads. For example, in video editing software, cache memory accelerates rendering by keeping frame data readily available. Conversely, in small embedded systems such as low-cost IoT microcontrollers, designers often keep caches tiny or omit them entirely, because the added cost, die area, and power draw can outweigh the benefits, and because cache-free designs have more predictable timing.

Real-World Applications and Examples

Cache memory isn’t just theoretical; it’s everywhere. In smartphones, it speeds up app switching and enhances gaming experiences. Web servers use in-memory caches to handle thousands of requests per second, as seen in frameworks like ASP.NET, where methods like SetAbsoluteExpiration allow precise control over cached data.
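The idea behind an absolute expiration, as with ASP.NET’s SetAbsoluteExpiration, is that a cached entry is discarded at a fixed deadline no matter how often it is read. The Python class below is a hypothetical, framework-independent sketch of that policy, not ASP.NET’s API; the class name and the injectable `clock` parameter are illustrative assumptions.

```python
import time


class AbsoluteExpiryCache:
    """Hypothetical in-memory cache whose entries expire at a fixed time.

    The deadline is fixed when an entry is stored and is not extended by
    later reads (unlike a sliding expiration). `clock` is injectable so
    that expiry can be demonstrated and tested without waiting.
    """

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}   # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._entries[key] = (value, self._clock() + ttl_seconds)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]   # lazily drop the expired entry
            return default
        return value


if __name__ == "__main__":
    now = [1000.0]                                   # fake clock for the demo
    cache = AbsoluteExpiryCache(clock=lambda: now[0])
    cache.set("homepage", "<html>...</html>", ttl_seconds=60)
    print(cache.get("homepage"))                     # still within the deadline
    now[0] += 60                                     # jump to the deadline
    print(cache.get("homepage", "expired"))
```

Expired entries are removed lazily when they are next read; a long-running server would also want a periodic sweep so that entries nobody reads again do not accumulate.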

In databases, query caches store results of frequent queries, reducing database load. E-commerce sites rely on this to provide snappy user experiences during peak traffic. Looking ahead to 2025, with 5G and edge computing, cache memory will be vital for real-time data processing in smart cities and autonomous systems.
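A query cache can be sketched at the application level with Python’s standard `functools.lru_cache` wrapped around a database call. This assumes a small in-memory SQLite table invented for the example; the function names are illustrative. The key design point is invalidation: any write must clear cached results, or users will see stale data.

```python
import sqlite3
from functools import lru_cache

# A throwaway in-memory database standing in for a real product catalog.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products (name, price) VALUES (?, ?)",
    [("keyboard", 49.0), ("mouse", 19.0), ("monitor", 199.0)],
)


@lru_cache(maxsize=128)
def products_under(max_price):
    """Return (name, price) rows cheaper than max_price, memoized by argument."""
    rows = conn.execute(
        "SELECT name, price FROM products WHERE price < ? ORDER BY price",
        (max_price,),
    ).fetchall()
    return tuple(rows)   # immutable, so callers cannot alter the cached result


def add_product(name, price):
    """Insert a product and invalidate cached query results."""
    conn.execute("INSERT INTO products (name, price) VALUES (?, ?)", (name, price))
    products_under.cache_clear()   # writes make every cached result suspect
```

Calling `products_under(50)` twice runs the SQL only once; `products_under.cache_info()` then reports one miss and one hit. Clearing the whole cache on every write is the simplest correct policy; finer-grained invalidation saves more work but is easier to get wrong.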

Consider a practical scenario: You’re streaming a video on Netflix. The app uses cache to buffer the next few seconds of content, preventing interruptions. If the cache is effective, you’ll enjoy seamless playback; if not, you might experience buffering, underscoring the technology’s impact on daily life.

Future Trends in Cache Memory

As we head into 2025, cache memory is evolving rapidly. New memory technologies like MRAM (Magnetoresistive Random Access Memory) promise caches that are denser and more energy-efficient than SRAM, since MRAM cells retain data without constant power, although write speed and endurance remain challenges. We’re also seeing AI-driven caching, where machine learning algorithms predict and preload data based on usage patterns, making systems more adaptive.

Further out, quantum computing research includes proposals for quantum memory that can hold superposition states, though such ideas remain experimental and far from practical caches. In the consumer space, expect smarter caches in devices like wearables, optimized for health data processing. These advancements will make computing more intuitive, but they also raise questions about privacy and data management.

Overall, the future of cache memory lies in balancing speed, size, and security, ensuring it keeps pace with the demands of an increasingly connected world.

Frequently Asked Questions

What is the difference between cache memory and RAM?

Cache memory and RAM both serve as temporary storage, but they differ in speed, size, and purpose. RAM, or main memory, is larger and holds the bulk of your system’s data, while cache memory is smaller and faster, acting as a high-speed intermediary. Typically, RAM uses DRAM, which is cost-effective for large capacities, whereas cache relies on SRAM for quicker access. In most cases, data flows from RAM to cache when needed by the CPU, enhancing overall efficiency. This hierarchy ensures that frequently accessed data is readily available, making your computer feel more responsive.

How does cache memory improve computer performance?

Cache memory boosts performance by storing copies of frequently used data closer to the CPU, reducing the time required for data retrieval. When the CPU requests data that’s already in the cache (a cache hit), it can access it almost immediately, avoiding slower trips to main memory. This is particularly beneficial in tasks involving repetitive operations, like gaming or video editing. However, the effectiveness depends on factors like cache size and hit rate, and in 2025, advancements in predictive caching are making caches even more effective for AI applications.

What are the levels of cache memory?

The levels of cache memory, often referred to as L1, L2, and L3, form a hierarchy in modern CPUs. L1 cache is the smallest and fastest, located directly on the processor core. L2 cache is larger and serves as a secondary layer, while L3 cache is shared among multiple cores and provides even more capacity. This setup allows for a graduated access speed, with data cascading from slower memory levels to faster ones as needed. Some systems also add an L4 cache, such as the embedded DRAM (eDRAM) used on certain Intel processors, for additional performance gains.

Is cache memory volatile or non-volatile?

Most cache memory is volatile, meaning it loses its data when power is turned off, which is why it’s suited for temporary storage. This is in contrast to non-volatile options like SSD caches, which retain data. Volatile caches, built with SRAM, offer speed advantages but require data to be reloaded on startup. In 2025, hybrid approaches are gaining traction, combining volatile and non-volatile elements to balance performance and persistence, especially in edge devices where uninterrupted operation is key.

How can I clear cache memory on my device?

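Clearing cached data varies by device and software. On a Windows PC, you can use the Disk Cleanup tool or clear your browser’s cache through its settings. On Android, caches are usually cleared per app: open Settings > Apps, select an app, then choose Storage > Clear cache (menu names vary by manufacturer and Android version). This removes temporary files, freeing up space and potentially improving performance, but initial loads may be slower while data is cached again. Note that these steps clear software caches only; the CPU’s hardware cache is managed automatically by the processor and isn’t something you clear by hand. Occasional clearing is reasonable maintenance, but clearing too often negates the benefits of caching.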

What is a cache miss, and how does it affect performance?

A cache miss occurs when the CPU requests data that’s not in the cache, forcing it to fetch from a slower level and, ultimately, from main memory. This increases access times and can bottleneck performance, especially in data-intensive tasks. To reduce misses, hardware designers enlarge caches and improve prefetching and replacement algorithms, while programmers can arrange data and loops so that memory is accessed sequentially. In practice, a high miss rate often points to inefficient memory access patterns, and understanding this can help you optimize software.
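Loop order alone can change the miss count dramatically. The sketch simulates, rather than times, two ways of walking a 64 × 64 array of 8-byte values stored row by row. It assumes 64-byte lines and a deliberately tiny fully associative LRU cache of 32 lines (2KB); these sizes are chosen to make the effect visible, not to match a real CPU, and the function names are illustrative.

```python
from collections import OrderedDict


def count_misses(addresses, line_size=64, capacity_lines=32):
    """Count misses in a fully associative LRU cache of `capacity_lines` lines."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // line_size
        if line in cache:
            cache.move_to_end(line)          # hit: refresh LRU position
        else:
            misses += 1
            if len(cache) >= capacity_lines:
                cache.popitem(last=False)    # evict the least recently used line
            cache[line] = True
    return misses


def matrix_addresses(n, elem_size=8, by_rows=True):
    """Yield byte addresses for visiting an n x n array stored row by row."""
    for outer in range(n):
        for inner in range(n):
            i, j = (outer, inner) if by_rows else (inner, outer)
            yield (i * n + j) * elem_size


if __name__ == "__main__":
    n = 64
    print("row by row:      ", count_misses(matrix_addresses(n, by_rows=True)))
    print("column by column:", count_misses(matrix_addresses(n, by_rows=False)))
```

Walking row by row misses once per 64-byte line and then hits on the next seven elements (512 misses for 4,096 accesses). Walking column by column jumps 512 bytes between accesses, touches 64 different lines per column, and overflows the 32-line cache, so every one of the 4,096 accesses misses. The same effect is why nested loops over row-major arrays usually put the column index in the inner loop.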

Can cache memory be upgraded in a computer?

In most consumer devices, cache memory is integrated into the CPU and cannot be upgraded separately, as it’s part of the processor’s design. However, in servers or high-end workstations, you might upgrade to a CPU with larger caches. For software caches, like those in applications, you can adjust settings to allocate more RAM. By 2025, with modular computing designs, we might see more flexible options, but for now, it’s largely fixed.

How does cache memory relate to modern technologies like AI?

In AI and machine learning, cache memory plays a crucial role by accelerating data access during model training and inference. AI frameworks often use specialized caches to store weights and activations, reducing computation times. As AI grows, so does the demand for larger, smarter caches that can handle complex access patterns. This integration is evident in GPU architectures, where unified caches enhance parallel processing, making AI more accessible and efficient in everyday applications.

Hi, I’m Nghia Vo: a computer hardware graduate, passionate PC hardware blogger, and entrepreneur with extensive hands-on experience building and upgrading computers for gaming, productivity, and business operations.

As the founder of Vonebuy.com, a verified ecommerce store under Vietnam’s Ministry of Industry and Trade, I combine my technical knowledge with real-world business applications to help users make confident decisions.

I specialize in no-nonsense guides on RAM overclocking, motherboard compatibility, SSD upgrades, and honest product reviews sharing everything I’ve tested and implemented for my customers and readers.