No, a $2,000 RTX 5090 cannot replace a $30,000 H200 for AI workloads. These processors serve fundamentally different purposes. The H200 dominates in artificial intelligence inference and training with 141GB of specialized memory, while the RTX 5090 excels at gaming and graphics rendering with consumer-focused architecture.

What Is the Price Difference Between These GPUs?

The RTX 5090 costs approximately $2,000, representing Nvidia’s flagship consumer gaming graphics card. The H200, by contrast, carries a $30,000 price tag. This 15-fold price difference reflects vastly different engineering approaches, target markets, and manufacturing processes. For individual consumers and gaming enthusiasts, the RTX 5090 represents an expensive but accessible investment. For enterprise AI deployments, the H200 becomes economically sensible when multiplied across hundreds or thousands of units in data centers.

When organizations deploy AI infrastructure, they purchase GPUs in massive quantities. A data center might contain thousands of H200 units working in parallel. From this perspective, each individual GPU becomes a relatively small fraction of the total infrastructure investment. Even at $30,000 per unit, the cost per unit of performance becomes reasonable when spread across enterprise budgets handling millions of daily transactions and computational requests.

What Are the H200 Technical Specifications?

The H200 represents cutting-edge data center architecture built on Nvidia’s GH100 die with 80 billion transistors. This processor features HBM3E memory configured on an exceptionally wide 6,144-bit bus. This memory interface delivers 4.8 terabytes per second of bandwidth, enabling rapid data movement between the processor and memory subsystem. The H200 operates at 1365 MHz base clock speeds and 1785 MHz boost speeds, considerably lower than consumer gaming GPUs.
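
The relationship between bus width and bandwidth can be sketched with a little arithmetic. The snippet below is a minimal illustration, not a vendor formula: it takes the 6,144-bit bus and 4.8 TB/s figures quoted above and derives the per-pin data rate they imply. The function names are hypothetical, and decimal units (1 TB/s = 1,000 GB/s) are assumed.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times per-pin rate."""
    return bus_width_bits / 8 * pin_rate_gbit_s


def implied_pin_rate_gbit_s(bus_width_bits: int, bandwidth_gb_s: float) -> float:
    """Per-pin data rate (Gbit/s) implied by a bus width and a peak bandwidth."""
    return bandwidth_gb_s * 8 / bus_width_bits


# Figures quoted in the article for the H200.
H200_BUS_BITS = 6144
H200_BANDWIDTH_GB_S = 4800.0  # 4.8 TB/s, assuming decimal units

pin_rate = implied_pin_rate_gbit_s(H200_BUS_BITS, H200_BANDWIDTH_GB_S)
print(f"Implied per-pin rate: {pin_rate} Gbit/s")
print(f"Round trip: {memory_bandwidth_gb_s(H200_BUS_BITS, pin_rate)} GB/s")
```

The takeaway is that the H200 reaches its bandwidth through an extremely wide bus running at a modest per-pin speed, which is the opposite trade-off from a narrow, fast consumer memory interface.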

The GPU contains 16,896 shading units and 528 tensor cores optimized specifically for mathematical operations common in artificial intelligence. Critically, the H200 lacks any graphics processing capabilities. It cannot render graphics through Vulkan, DirectX, or OpenGL APIs. This represents an intentional engineering decision. Nvidia removed graphics functions entirely to maximize silicon area dedicated to computation and memory subsystems. The H200 was built for one purpose: processing massive amounts of numerical data as efficiently as possible.

The processor is manufactured on TSMC’s advanced 5nm-class process node, which Nvidia brands as 4N. This enables extremely dense transistor packing and efficient power delivery. The architecture emphasizes on-package memory through HBM stacks containing 12 layers each, with six separate stacks integrated directly onto the GPU package.


Why Does the H200 Cost So Much to Manufacture?

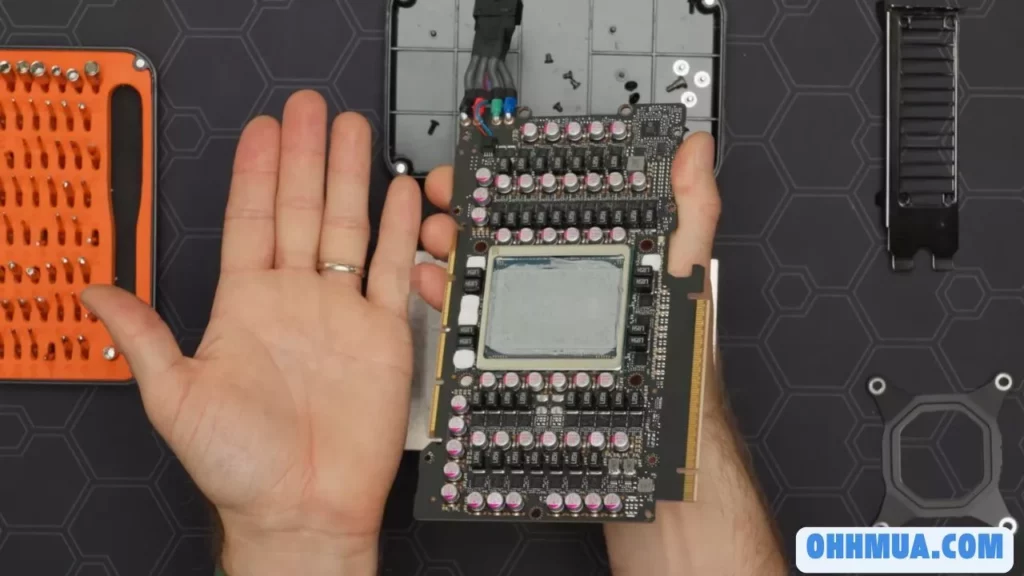

A detailed teardown revealed the H200’s complex engineering. The processor includes six separate HBM memory stacks, each containing 12 individual layers. These stacks connect almost directly to the GPU die, minimizing electrical distances and maximizing efficiency. Power delivery components throughout the package handle complex voltage regulation for different subsystems. The aluminum fins visible on the exterior are cosmetic, providing no thermal function, yet they highlight the packaging density.

Manufacturing yields significantly impact the H200’s cost. Each HBM stack requires perfect integration at every single layer. If any layer fails during manufacturing, the entire stack becomes unusable, and the whole GPU must be scrapped. A single defect in any one of the 72 total HBM layers (12 layers in each of 6 stacks) means complete waste of the entire expensive package. This manufacturing fragility drives costs higher than conventional gaming GPUs, where defects might affect smaller portions of the silicon.
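
To see why 72 layers that must all be good hurts so much, here is a rough sketch of compound yield. It assumes every layer fails independently with the same probability and that one bad layer scraps the package, as described above. The per-layer yield values are purely hypothetical, chosen for illustration rather than taken from any real production data.

```python
def package_memory_yield(per_layer_yield: float,
                         layers_per_stack: int = 12,
                         stacks: int = 6) -> float:
    """Probability that every HBM layer in the package is good.

    Assumes independent, identical per-layer yields (a simplification).
    """
    total_layers = layers_per_stack * stacks
    return per_layer_yield ** total_layers


# Hypothetical per-layer yields, for illustration only.
for layer_yield in (0.999, 0.995, 0.99):
    good = package_memory_yield(layer_yield)
    print(f"per-layer yield {layer_yield:.1%} -> all 72 layers good: {good:.1%}")
```

Under these assumptions, even a 99% per-layer yield leaves fewer than half of the packages with fully working memory, which is the compounding effect that pushes unit cost up.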

The 5nm manufacturing process commands premium pricing. Production on such advanced nodes requires sophisticated equipment and yields significantly lower product quantities per wafer compared to older manufacturing nodes. When combined with the high defect rate from stacked memory integration, manufacturing costs soar. Each successful H200 GPU represents multiple failed units across the production line, necessitating high unit pricing for successful products.

How Does Power Consumption Compare Between These Cards?

Surprisingly, both the RTX 5090 and H200 draw approximately 600 watts of power under full load. This similarity appears counterintuitive given their vast performance differences. The answer lies in different engineering priorities. The RTX 5090 runs at considerably higher clock speeds than the H200, and higher clocks cost more power per computation. The H200, running at lower clock speeds, compensates through dramatically larger memory capacity and bandwidth.

The H200’s 141 gigabytes of VRAM, compared to the RTX 5090’s 32 gigabytes, lets far larger models and datasets stay resident on the card. Feeding that memory at 4.8 terabytes per second requires extensive power delivery and memory interface circuitry. Both cards ultimately reach similar power envelopes despite traveling different architectural paths.

At enterprise scale, small efficiency improvements become economically significant. Data centers operating thousands of GPUs can see electricity bills in the millions of dollars annually. Even a 5% efficiency improvement saves substantial money when multiplied across thousands of processors. This explains why enterprises invest heavily in the most power-efficient solutions despite higher initial costs. Long-term operational savings can exceed the premium pricing over the equipment’s multi-year lifespan.
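
A back-of-the-envelope estimate makes the scale concrete. The sketch below counts GPU power only, using the roughly 600 W figure above. The fleet size, the electricity price of $0.10 per kWh, and the `pue` overhead multiplier for cooling are all assumptions for illustration, not figures from any real deployment.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost_usd(gpu_count: int,
                           watts_per_gpu: float = 600.0,
                           usd_per_kwh: float = 0.10,  # assumed price
                           pue: float = 1.0) -> float:  # assumed overhead factor
    """Yearly electricity cost for GPUs running at full load all year."""
    kwh = gpu_count * watts_per_gpu / 1000 * HOURS_PER_YEAR * pue
    return kwh * usd_per_kwh


fleet = 10_000  # hypothetical fleet size
baseline = annual_energy_cost_usd(fleet)
savings = baseline * 0.05  # the 5% efficiency improvement mentioned above

print(f"Baseline GPU electricity: ${baseline:,.0f} per year")
print(f"Saved by a 5% improvement: ${savings:,.0f} per year")
```

Real bills would be higher once CPUs, networking, and cooling are included, which is what raising `pue` above 1.0 is meant to approximate.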

How Do These Cards Perform for AI Language Model Processing?

Direct performance testing revealed the H200’s dominance for artificial intelligence workloads. When compared against a dual AMD EPYC 9965 server configuration containing 384 processor cores and costing over $20,000, the results proved decisive. The H200 generated large language model responses at 122 tokens per second. The dual-CPU system managed only 21 tokens per second. The GPU completed complex prompts almost instantly while the CPU system struggled for minutes to process identical requests.
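
To translate those throughput numbers into waiting time, the short sketch below uses the two measured rates quoted above. The 500-token response length is a hypothetical example, and prompt processing time is ignored.

```python
H200_TOKENS_PER_S = 122.0      # measured rate quoted in the article
DUAL_EPYC_TOKENS_PER_S = 21.0  # measured rate quoted in the article


def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Time to emit a response of the given length at a steady rate."""
    return tokens / tokens_per_second


speedup = H200_TOKENS_PER_S / DUAL_EPYC_TOKENS_PER_S
response_tokens = 500  # hypothetical response length

print(f"Speedup: {speedup:.1f}x")
print(f"H200: {generation_seconds(response_tokens, H200_TOKENS_PER_S):.1f} s")
print(f"CPUs: {generation_seconds(response_tokens, DUAL_EPYC_TOKENS_PER_S):.1f} s")
```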

This performance gap stems from architectural fundamentals. CPUs excel at sequential task processing and complex decision-making. GPUs excel at parallel mathematical operations required for neural networks. Large language models primarily perform matrix multiplications, operations where GPUs enjoy thousands of simultaneous execution paths. Modern artificial intelligence work is fundamentally parallel in nature, perfectly aligned with GPU strengths and CPU weaknesses.

The H200’s 141 gigabytes of memory proved critical for this test. Processing advanced language models requires keeping enormous numerical arrays in immediate access. The RTX 5090’s 32 gigabytes becomes insufficient for enterprise-scale models. When working with the GPT-OSS 120B model, which requires 65 gigabytes, the RTX 5090 overflowed its memory capacity entirely. The gaming card fell back to system RAM, causing dramatic performance degradation. The H200 handled the same workload entirely within its on-package memory, maintaining peak performance.
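
The overflow described above comes down to a simple capacity check. The sketch below uses the 65 GB, 32 GB, and 141 GB figures from the article. The `overhead_gb` parameter is a hypothetical allowance for things like the KV cache and runtime buffers, and defaults to zero because the article gives no figure for it.

```python
def spill_gb(model_gb: float, vram_gb: float, overhead_gb: float = 0.0) -> float:
    """Gigabytes that do not fit in VRAM and must live in system RAM."""
    return max(0.0, model_gb + overhead_gb - vram_gb)


def fits_in_vram(model_gb: float, vram_gb: float, overhead_gb: float = 0.0) -> bool:
    """True when the whole working set stays on the card."""
    return spill_gb(model_gb, vram_gb, overhead_gb) == 0.0


MODEL_GB = 65.0  # footprint quoted in the article
CARDS = {"RTX 5090": 32.0, "H200": 141.0}

for name, vram in CARDS.items():
    if fits_in_vram(MODEL_GB, vram):
        print(f"{name}: fits, {vram - MODEL_GB:.0f} GB of headroom")
    else:
        print(f"{name}: spills {spill_gb(MODEL_GB, vram):.0f} GB to system RAM")
```

Anything that spills has to cross the PCIe bus on every access, which is why the gaming card's throughput collapses rather than degrading gracefully.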

What About Image Generation Performance?

For Stable Diffusion image generation using the SDXL model, the H200 showcased another overwhelming performance advantage. The professional GPU completed three high-quality images in seconds. The CPU-based system required over 7 minutes for identical work. The CPU system consumed 99 gigabytes of system memory and barely functioned, struggling through computational bottlenecks.

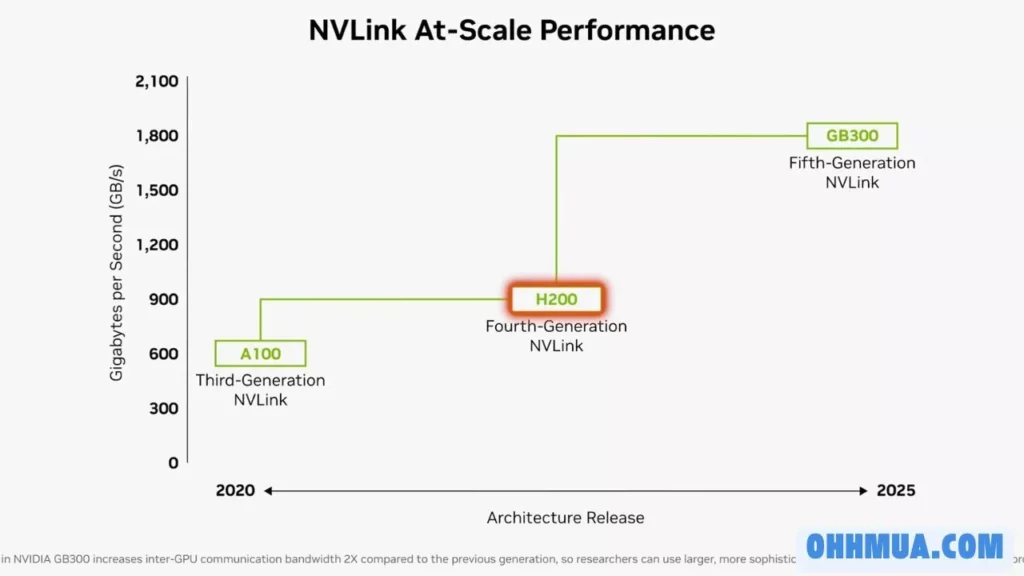

The H200’s NVLink interconnect capability provides additional advantages for this workload. Up to four H200 cards can communicate directly at 900 gigabytes per second of bidirectional bandwidth. That is roughly seven times the approximately 128 gigabytes per second of bidirectional bandwidth that a PCIe Generation 5 x16 slot offers consumer GPUs, or about 14 times the slot’s bandwidth in a single direction. When running distributed image generation across multiple H200s, data flows between processors with minimal latency, maintaining consistent performance.

Consumer gaming GPUs cannot achieve equivalent multi-card performance. The RTX 5090 lacks any direct GPU-to-GPU communication pathways. Multiple gaming cards communicate through the host CPU and system memory, introducing significant latency and bandwidth limitations. Enterprise workloads benefit enormously from the H200’s direct interconnect architecture, enabling truly scalable distributed processing across datacenter deployments.
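
The interconnect gap is easiest to judge by comparing like with like. The sketch below takes the 900 GB/s bidirectional NVLink figure from the article, halves it for a one-way transfer, and compares it with an assumed 64 GB/s per direction for a PCIe Generation 5 x16 link. The 10 GB payload is hypothetical, and protocol overhead and latency are ignored.

```python
NVLINK_BIDIR_GB_S = 900.0      # figure quoted in the article
PCIE5_X16_PER_DIR_GB_S = 64.0  # assumed theoretical peak, one direction


def one_way_transfer_seconds(payload_gb: float, per_direction_gb_s: float) -> float:
    """Idealized time to push a payload across a link in one direction."""
    return payload_gb / per_direction_gb_s


nvlink_per_dir = NVLINK_BIDIR_GB_S / 2  # bidirectional figure split evenly
payload_gb = 10.0                       # hypothetical tensor shard

t_nvlink = one_way_transfer_seconds(payload_gb, nvlink_per_dir)
t_pcie = one_way_transfer_seconds(payload_gb, PCIE5_X16_PER_DIR_GB_S)

print(f"NVLink: {t_nvlink * 1000:.1f} ms")
print(f"PCIe 5: {t_pcie * 1000:.1f} ms")
print(f"Per-direction ratio: {nvlink_per_dir / PCIE5_X16_PER_DIR_GB_S:.1f}x")
```

Measured per direction, the ratio comes out at about 7x. The larger 14x figure appears only when NVLink's bidirectional total is set against PCIe's one-way rate.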

How Do These Cards Compare for Traditional Gaming and Graphics?

The RTX 5090 completely dominates the H200 in traditional graphics workloads. When rendering complex scenes using Blender, the gaming GPU dramatically outperformed the professional AI processor. This result should surprise nobody. The RTX 5090 contains specialized graphics pipelines, texture units, and rasterization hardware. The H200 contains none of this. Gaming GPUs like the RTX 5090 evolved specifically to accelerate graphics rendering operations, while the H200 evolved specifically to avoid them.

The RTX 5090 offers GDDR7 memory optimized for the high bandwidth requirements of graphics work. While the RTX 5090’s memory bandwidth trails the H200’s theoretical capability, graphics work benefits from the memory’s access patterns and the specialized graphics cache hierarchy. The gaming card includes hardware specifically designed for texture filtering, depth testing, and other graphics-specific operations. The H200 lacks all these specialized structures.

For gaming, streaming, 3D animation, and visual design work, the choice is obvious. The RTX 5090 excels at its intended purpose. However, this represents a completely different domain from artificial intelligence processing. Attempting to use an H200 for gaming would be just as misguided as deploying an RTX 5090 for enterprise AI. You would have the wrong tool for the job, regardless of raw computing power.

What About AI Model Training Performance?

Training artificial intelligence models requires different capabilities than inference. Testing with various model sizes revealed nuanced performance differences. The H200 finished 31% faster when training smaller models like TinyLlama and mini instruct configurations. For larger 8-billion-parameter models, the H200’s superiority became absolute. The RTX 5090 simply could not train at full precision due to VRAM limitations. The gaming card overflowed its memory when attempting 8B parameter model training.
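
A common rule of thumb shows why an 8B model overflows 32 GB during full-precision training. The sketch below assumes FP32 weights, FP32 gradients, and an Adam-style optimizer holding two FP32 states per parameter, for 16 bytes per parameter in total. The article does not say which optimizer was used, so treat this as an illustrative assumption; activation memory is excluded, so real usage is higher.

```python
BYTES_FP32 = 4


def training_memory_gb(params: float,
                       optimizer_states_per_param: int = 2) -> float:
    """Rough training footprint in GB (decimal), excluding activations.

    Counts FP32 weights + FP32 gradients + FP32 optimizer states.
    """
    bytes_per_param = BYTES_FP32 * (2 + optimizer_states_per_param)
    return params * bytes_per_param / 1e9


needed = training_memory_gb(8e9)  # 8-billion-parameter model

for name, vram_gb in {"RTX 5090": 32.0, "H200": 141.0}.items():
    verdict = "fits" if needed <= vram_gb else "does not fit"
    print(f"{name}: needs ~{needed:.0f} GB, has {vram_gb:.0f} GB -> {verdict}")
```

Under these assumptions the requirement lands well above the RTX 5090's capacity but within the H200's, before activations are even counted.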

The story changes for extremely small models. When training YOLOv8 Nano with only 3 million parameters, the RTX 5090’s extra CUDA cores helped close the performance gap. Since the entire model fit comfortably in memory, memory bandwidth became less critical than raw computational throughput. The RTX 5090’s higher clock speeds and additional compute cores provided tangible benefits for memory-unconstrained workloads.

This reveals an important principle. GPU selection depends heavily on the specific workload characteristics. Memory-constrained workloads favor the H200’s enormous capacity and bandwidth. Compute-constrained workloads with small models favor the RTX 5090’s higher clock speeds. Enterprise deployments typically handle larger models where memory becomes the limiting factor, explaining why the H200 remains the standard choice despite its higher cost.

What Metrics Matter for Each Use Case?

For gaming and graphics work, frames per second, ray tracing performance, and shader throughput matter most. These metrics heavily favor the RTX 5090. Consumer users care about gaming performance, content creation speed, and value per dollar. The RTX 5090 delivers excellent value for these applications.

For artificial intelligence work, different metrics dominate. Tokens per second for language models, inference latency, and throughput per watt become critical. Model training speed, batch processing capability, and distributed training efficiency matter for enterprise deployments. The H200’s massive memory capacity and specialized architecture optimize for these completely different metrics.

The comparison is ultimately one of apples and oranges. Both are excellent processors, but for entirely different purposes. A better question than “which is better” might be “which is right for my specific needs?” For gaming, the answer is obviously the RTX 5090. For enterprise AI, the answer is obviously the H200. Attempting to use either for the other’s intended purpose would be wasteful and inefficient.

How Do These Cards Fit in Their Respective Markets?

The RTX 5090 addresses the consumer gaming and professional graphics market. Gamers building high-end systems, content creators working with 3D rendering, and visualization professionals represent its target audience. At $2,000, the card represents a significant investment for individuals but sits at a reasonable price point for professionals whose work generates income. The consumer market absorbs tens of thousands of RTX 5090 units annually.

The H200 addresses the enterprise artificial intelligence market. Data center operators, cloud service providers, and research institutions building AI infrastructure purchase these cards by the thousands. Individual H200 purchases are virtually non-existent. Large organizations negotiate volume pricing on multi-year contracts. A single enterprise might order 10,000 H200 units, making it one of the vendor’s largest GPU customers for years. This market structure enables sustainable $30,000 pricing through sheer volume.

Understanding market positioning clarifies why the price difference exists. Enterprise customers make purchasing decisions based on total cost of ownership, deployment timelines, and operational efficiency. They willingly pay premium prices for superior performance if it reduces power consumption, cooling requirements, and physical space. Individual consumers make purchasing decisions primarily on upfront cost and gaming performance. These different priorities explain the two markets and two completely different GPU designs.

| Specification | RTX 5090 | H200 |
|---|---|---|
| Retail Price | ~$2,000 | ~$30,000 |
| VRAM Capacity | 32 GB GDDR7 | 141 GB HBM3E |
| Memory Bandwidth | ~1.8 TB/s | 4.8 TB/s |
| Power Draw | ~600 W | ~600 W |
| GPU Architecture | Blackwell (gaming) | Hopper, GH100 die (AI) |
| Graphics APIs | DirectX, Vulkan, OpenGL | None (AI-only) |
| LLM Inference Speed | Lower (limited by 32 GB) | 122 tokens/sec |
| Best For | Gaming, Graphics | AI, Data Science |

Frequently Asked Questions

Can I use an RTX 5090 for professional AI work?

The RTX 5090 can run AI models but with significant limitations. The 32GB memory capacity restricts work to smaller models. For enterprise-scale language models and large datasets, the card becomes impractical. Many professionals do use RTX 5090s for experimentation and development before scaling to H200-based infrastructure.

Why can’t Nvidia just make gaming GPUs do AI better?

The hardware architectures optimize for different workloads. Graphics require specialized rendering pipelines and texture units that waste space for AI workloads. AI work benefits more from massive memory capacity and bandwidth, which consumes space graphics hardware doesn’t need. Nvidia essentially creates purpose-built tools for each market.

Is the H200 worth $30,000 for large organizations?

Yes, for organizations running massive AI operations. The total cost of ownership including electricity, cooling, and physical space often justifies the premium price. A single H200 might power thousands of user requests daily, making the per-request cost extremely low despite high unit cost.

Can you use RTX 5090s instead of H200s to save money?

Not for equivalent performance. While individual RTX 5090s cost 15 times less, you would need significantly more cards to achieve equivalent throughput due to memory limitations and reduced AI optimization. Total system costs often favor the H200 despite higher unit pricing.

What does NVLink do that PCIe cannot?

NVLink connects H200 cards directly at 900 GB/s bidirectional bandwidth. A PCIe Generation 5 x16 link maxes out around 64 GB/s per direction, or about 128 GB/s bidirectional. Direct GPU-to-GPU communication eliminates CPU bottlenecks when distributing work across multiple processors, essential for enterprise-scale AI workloads.

Which GPU should I buy for my needs?

Choose the RTX 5090 for gaming, content creation, and graphics work. Choose H200 only if you’re running an AI infrastructure company or equivalent enterprise deployment. The question isn’t really which is better, but which matches your actual use case.

Hi, I’m Nghia Vo: a computer hardware graduate, passionate PC hardware blogger, and entrepreneur with extensive hands-on experience building and upgrading computers for gaming, productivity, and business operations.

As the founder of Vonebuy.com, a verified ecommerce store under Vietnam’s Ministry of Industry and Trade, I combine my technical knowledge with real-world business applications to help users make confident decisions.

I specialize in no-nonsense guides on RAM overclocking, motherboard compatibility, SSD upgrades, and honest product reviews sharing everything I’ve tested and implemented for my customers and readers.