Introduction to Virtualization

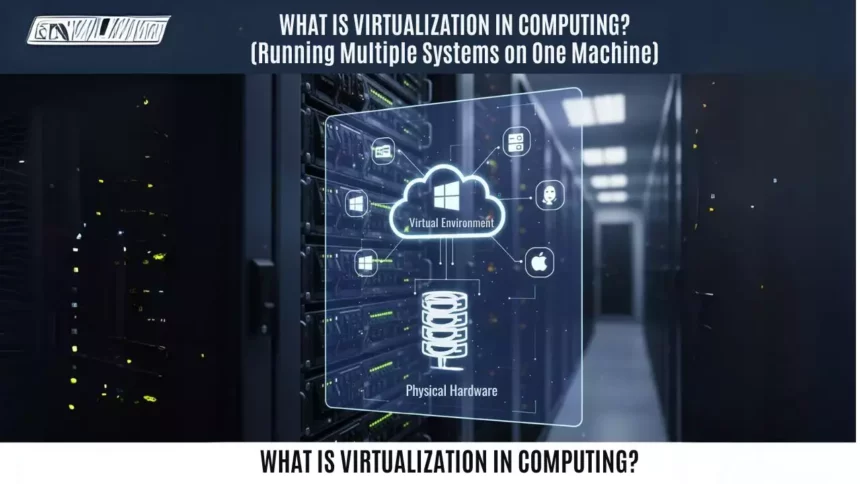

Have you ever wondered how a single computer can run multiple operating systems at the same time, or how massive data centers manage to handle thousands of tasks without breaking a sweat? That’s the magic of virtualization in computing. At its core, virtualization is a technology that allows us to create simulated environments from physical hardware, making it possible to divide resources like processors, memory, and storage into multiple independent units. This isn’t just a tech buzzword; it’s a foundational concept that’s revolutionized how we use computers, from personal devices to sprawling enterprise networks.

Imagine you’re a chef in a busy kitchen. Instead of having one massive oven for everything, you could use clever dividers to turn that oven into several smaller ones, each handling a different dish without interference. That’s essentially what virtualization does for computing resources. It started gaining prominence in the mid-20th century, but today, it’s everywhere, from the apps on your smartphone to the servers powering global websites. In this article, we’ll explore what virtualization is, how it works, its various forms, and why it’s more relevant than ever in our increasingly digital world. By the end, you’ll have a clear, practical understanding of this transformative technology, including its real-world applications and future potential.

To set the stage, let’s draw from reliable sources like Wikipedia, which defines virtualization as a set of technologies that partition physical computing resources into virtual ones, such as virtual machines, operating systems, or containers. This concept has evolved significantly since its inception, influencing areas like cloud computing, where resources are shared over the internet. As we delve deeper, we’ll see how virtualization not only optimizes efficiency but also paves the way for innovative solutions in industries from IT to automotive.

The History of Virtualization

To truly appreciate virtualization, we need to rewind to its origins. The story begins in the 1960s, a time when computing was still in its infancy. According to historical accounts, IBM played a pivotal role with the development of the CP/CMS system. This was one of the first practical implementations of virtualization, where the control program (CP) allowed multiple users to operate what felt like individual System/360 computers on a single machine. Think of it as time-sharing on steroids, enabling efficient use of expensive hardware in an era when computers were room-sized behemoths.

Virtualization continued to mature through the 1970s and 1980s alongside advances in mainframe operating systems and hardware. Then, in the late 1990s, companies like VMware (founded in 1998) brought virtualization to the mainstream with software that could run multiple virtual machines (VMs) on a single x86 server. This was a game-changer for businesses, as it reduced the need for physical hardware and lowered costs. By the 2000s, with the rise of the internet, virtualization intertwined with cloud computing, as described in Wikipedia’s entry on the topic. Cloud computing relies heavily on virtualization to provide scalable, on-demand resources, allowing users to access shared pools of computing power without worrying about the underlying infrastructure.

In recent years, especially as we approach 2025, virtualization has expanded into new territories. For instance, in automotive technology, as highlighted in sources like Thundercomm’s development platforms, virtualization enables heterogeneous system-on-chip (SoC) architectures. This means vehicles can run multiple operating systems simultaneously for features like advanced driver assistance and infotainment, all while ensuring safety and efficiency. The evolution reflects a broader trend: from isolated mainframes to interconnected, virtualized ecosystems that support everything from remote work to AI-driven applications.

This historical perspective isn’t just academic; it shows how virtualization has adapted to technological shifts. Today, it’s a cornerstone of modern computing, driven by the need for flexibility, scalability, and resource optimization in a world where data demands are exploding.

What Exactly Is Virtualization?

At its simplest, virtualization is the process of creating a virtual version of something, like hardware, that behaves as if it were physical. In computing, this typically involves using software to simulate physical devices. For example, a hypervisor, a key piece of software in virtualization, acts as an intermediary layer between the physical hardware and the virtual machines it hosts. This allows multiple operating systems to run on one machine without interfering with each other.

Let’s break it down further. When you boot up a virtual machine, you’re essentially allocating a slice of the host computer’s resources, such as CPU cycles, RAM, and disk space, to create an isolated environment. This isolation is crucial for security and performance. Imagine running a Windows operating system on a Mac; virtualization software like VirtualBox makes this possible by mimicking the necessary hardware components.

One common misconception is that virtualization is the same as emulation. Both create simulated environments, but emulation translates every instruction written for one architecture into instructions for another, which adds overhead and can be much slower. Virtualization, on the other hand, lets the guest’s instructions run directly on the physical CPU (guest and host share the same architecture), with the hypervisor stepping in only for privileged operations. This makes it far more efficient. The distinction is important for applications like cloud computing, where speed and reliability are paramount.
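To make the overhead of emulation concrete, here is a toy sketch of what an emulator does. It runs a fetch-decode-execute loop in which host software interprets every guest instruction. The three-instruction "guest ISA" and the `emulate` function are invented for illustration. Real emulators such as QEMU translate whole blocks of guest code rather than interpreting one instruction at a time. Under hardware virtualization, by contrast, the common case has no loop like this at all: guest instructions simply run on the CPU.

```python
# Toy emulator for a made-up three-instruction guest ISA (hypothetical,
# for illustration only). Host code fetches, decodes, and executes every
# guest instruction, which is why pure emulation is slow.

def emulate(program, registers=None):
    """Interpret (opcode, *operands) tuples; return (registers, instructions executed)."""
    regs = dict(registers or {})
    pc = 0          # guest program counter
    executed = 0    # guest instructions interpreted by the host
    while pc < len(program):
        op, *args = program[pc]              # fetch and decode
        executed += 1
        if op == "LOAD":                     # LOAD reg, constant
            reg, value = args
            regs[reg] = value
        elif op == "ADD":                    # ADD dst, src  (dst = dst + src)
            dst, src = args
            regs[dst] = regs.get(dst, 0) + regs.get(src, 0)
        elif op == "JNZ":                    # JNZ reg, target (jump if reg != 0)
            reg, target = args
            if regs.get(reg, 0) != 0:
                pc = target
                continue
        else:
            raise ValueError(f"unknown opcode: {op}")
        pc += 1
    return regs, executed


if __name__ == "__main__":
    # Sum 3 + 2 + 1 into r0 using a countdown loop in r1.
    program = [
        ("LOAD", "r1", 3),
        ("LOAD", "r2", -1),
        ("LOAD", "r0", 0),
        ("ADD", "r0", "r1"),   # index 3: loop body
        ("ADD", "r1", "r2"),   # r1 -= 1
        ("JNZ", "r1", 3),      # repeat while r1 != 0
    ]
    regs, executed = emulate(program)
    print(regs["r0"], executed)  # 6 12
```

For each guest instruction, the host performs several operations of its own: tuple unpacking, branching, and dictionary updates. That repeated work is the cost native execution avoids.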

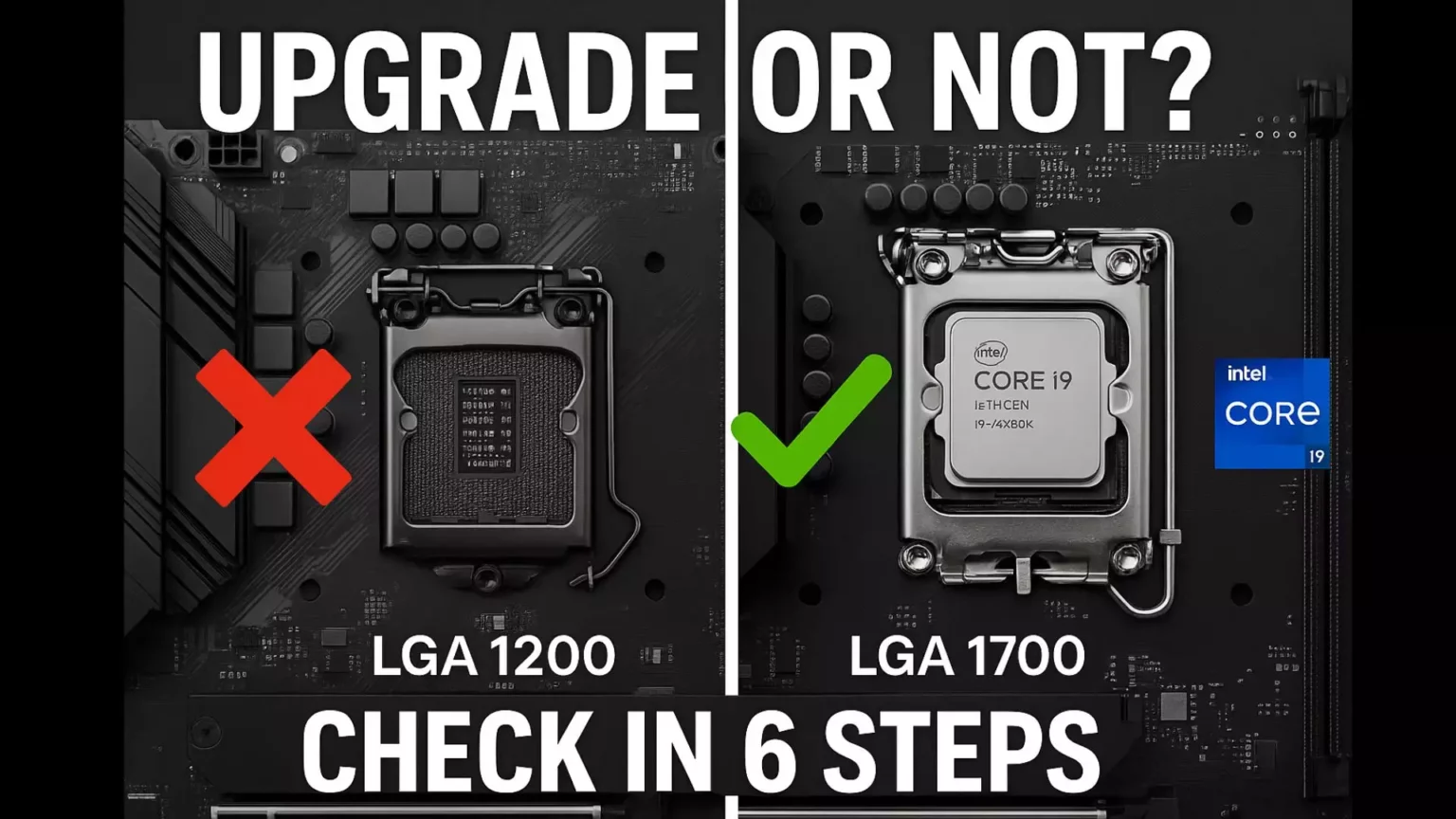

In practice, virtualization can be applied at different levels. Hardware virtualization involves the CPU and other components, while software virtualization deals with applications. As we move into 2025, hardware support continues to make this technology more seamless and powerful. Examples include the Intel VT-x and AMD-V extensions that Intel and AMD have built into their processors since the mid-2000s.

Types of Virtualization

Virtualization isn’t a one-size-fits-all solution; it comes in various forms, each tailored to specific needs. Let’s explore the main types, drawing from general knowledge and verified sources to provide a comprehensive overview.

Server Virtualization

This is perhaps the most widely used form. In server virtualization, a single physical server is divided into multiple virtual servers, each running its own operating system. This is achieved through a hypervisor, which could be Type 1 (bare-metal, like VMware ESXi) or Type 2 (hosted, like Oracle VirtualBox). The benefits are enormous: it maximizes server utilization, reduces energy costs, and simplifies management.

For instance, in a data center, you might have one physical server hosting several virtual machines for different applications, such as a web server, a database, and an email system. This setup, often seen in cloud computing environments, allows for easy scaling and disaster recovery. According to trends projected for 2025, server virtualization will continue to evolve with AI integration, enabling predictive resource allocation.
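Deciding which VMs share a physical server is essentially a bin-packing problem. As a minimal sketch, the function below packs VMs onto hosts by memory alone using first-fit decreasing, a classic heuristic. The VM names, sizes, and 64 GB host capacity are hypothetical. Real placement engines also weigh CPU, storage, and placement rules.

```python
# First-fit decreasing placement of VMs onto hosts by memory (GB).
# Names, sizes, and capacity are hypothetical; only memory is considered.

def place_vms(vm_memory, host_capacity):
    """Return a list of hosts, each a list of VM names, packed first-fit decreasing."""
    hosts = []  # each entry: [free_gb, [vm names]]
    # Place the largest VMs first; this tends to reduce the number of hosts needed.
    for name, need in sorted(vm_memory.items(), key=lambda kv: kv[1], reverse=True):
        if need > host_capacity:
            raise ValueError(f"{name} needs {need} GB, more than one host provides")
        for host in hosts:
            if host[0] >= need:          # first host with enough free memory
                host[0] -= need
                host[1].append(name)
                break
        else:
            hosts.append([host_capacity - need, [name]])  # no fit: open a new host
    return [vms for _, vms in hosts]


if __name__ == "__main__":
    workloads = {"web": 16, "db": 48, "mail": 8, "cache": 24, "ci": 32}
    print(place_vms(workloads, host_capacity=64))
    # [['db', 'web'], ['ci', 'cache', 'mail']]
```

In this example, five workloads that might otherwise occupy five physical servers fit on two.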

Desktop Virtualization

Ever needed to access your work desktop from home? That’s where desktop virtualization shines. It involves running a user’s desktop environment on a remote server, which is then accessed via a thin client or even a web browser. Technologies like Virtual Desktop Infrastructure (VDI) make this possible by hosting users’ desktops as virtual machines in a data center.

With desktop virtualization, IT departments can manage and secure endpoints more effectively. For example, if an employee’s laptop is lost, the data remains safe on the server. In 2025, we can expect enhancements in user experience, with faster remote connections and better integration with mobile devices, making remote work even more efficient.

Network Virtualization

This type abstracts network resources, allowing you to create virtual networks that operate independently of the physical infrastructure. Think of it as slicing a physical network into multiple logical ones. Professor Raouf Boutaba’s work on network virtualization, as referenced in Google Scholar, highlights its role in managing complex systems like those in cloud resource allocation.

Network virtualization is crucial for software-defined networking (SDN), where traffic can be routed dynamically. In real-world scenarios, it helps in creating isolated environments for testing or segregating traffic in large enterprises. Looking ahead, with the growth of 5G and edge computing, network virtualization will play a key role in optimizing data flow.
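One of the oldest and simplest forms of network slicing is the IEEE 802.1Q VLAN tag. These four bytes are inserted into an Ethernet frame and carry a 12-bit VLAN ID, so switches can keep traffic from different logical networks apart on the same physical link. The sketch below packs and parses that tag with Python's `struct` module. It illustrates the header layout only and does not build a full frame. The function names and VLAN numbers are arbitrary.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier that marks an 802.1Q VLAN tag


def build_vlan_tag(vlan_id, priority=0, dei=0):
    """Pack a 4-byte 802.1Q tag: TPID, then PCP (3 bits) | DEI (1 bit) | VID (12 bits)."""
    if not 1 <= vlan_id <= 4094:          # VID 0 and 4095 are reserved
        raise ValueError("VLAN ID must be 1-4094")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0-7 and DEI must be 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network (big-endian) byte order


def parse_vlan_tag(tag):
    """Unpack a 4-byte 802.1Q tag into (vlan_id, priority, dei)."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag: TPID {tpid:#06x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 0x1


if __name__ == "__main__":
    tag = build_vlan_tag(100, priority=5)
    print(tag.hex(), parse_vlan_tag(tag))  # 8100a064 (100, 5, 0)
```

A switch that receives frames tagged with VLAN 100 forwards them only to ports in VLAN 100. This is how one physical network can carry several isolated logical ones.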

Storage Virtualization

Here, physical storage devices are pooled and managed as a single repository. This makes it easier to allocate space on demand and handle data backups. For example, in a virtualized storage system, you could automatically expand a virtual machine’s disk without physically adding hardware.
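At its simplest, pooling means presenting several physical devices as one contiguous logical address space. Each logical block number is then translated to a (device, block) pair. The sketch below does this by plain concatenation, similar in spirit to a linear logical volume. The `StoragePool` class and disk names are hypothetical. Production systems add striping, redundancy, and persistent metadata.

```python
from bisect import bisect_right


class StoragePool:
    """Hypothetical sketch: concatenate physical disks into one logical block space."""

    def __init__(self):
        self.disks = []    # (name, size in blocks), in the order they were added
        self.starts = []   # first logical block served by each disk

    def add_disk(self, name, blocks):
        """Grow the pool; blocks already in use keep their existing mapping."""
        start = self.starts[-1] + self.disks[-1][1] if self.disks else 0
        self.disks.append((name, blocks))
        self.starts.append(start)

    @property
    def capacity(self):
        return sum(size for _, size in self.disks)

    def locate(self, logical_block):
        """Translate a logical block number into (disk name, block on that disk)."""
        if not 0 <= logical_block < self.capacity:
            raise IndexError("logical block out of range")
        i = bisect_right(self.starts, logical_block) - 1  # last disk starting at or before it
        name, _ = self.disks[i]
        return name, logical_block - self.starts[i]


if __name__ == "__main__":
    pool = StoragePool()
    pool.add_disk("disk-a", 1000)
    pool.add_disk("disk-b", 500)
    print(pool.capacity, pool.locate(1200))  # 1500 ('disk-b', 200)
```

Adding a disk extends the logical address space without moving existing blocks. This is the property that lets pooled storage grow underneath a virtual disk.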

As we approach 2025, storage virtualization is integrating with AI for predictive analytics, helping prevent data loss and improving efficiency.

Application Virtualization

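This isolates applications from the underlying operating system, allowing them to run in a sandboxed environment. Container tools like Docker take a closely related approach. Each container packages an application with its dependencies and shares the host’s kernel instead of booting a full guest operating system. The result is lightweight virtualization that’s ideal for development and deployment.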

Each type has its own use cases, and often, they’re used in combination for comprehensive solutions.

To make this clearer, here’s a quick comparison table of the main types:

| Type | Primary Use | Key Benefits | Challenges |
|---|---|---|---|
| Server Virtualization | Optimizing physical servers | High resource utilization, cost savings | Potential performance overhead |
| Desktop Virtualization | Remote access to desktops | Enhanced security, easy management | Relies on stable network connections |
| Network Virtualization | Managing network resources | Flexibility in routing, scalability | Complexity in configuration |
| Storage Virtualization | Efficient data management | Simplified storage allocation | Data integrity risks if not managed properly |

This table highlights how each type addresses different needs, helping you choose the right one for your scenario.

How Virtualization Works

Under the hood, virtualization relies on a few core components. The hypervisor, also called a virtual machine monitor, is the star of the show. It is a thin layer of software that controls the physical hardware and schedules it among virtual machines. When a VM requests resources, the hypervisor intercepts the request and allocates them accordingly.

For example, if two VMs are competing for CPU time, the hypervisor uses scheduling algorithms to ensure fair distribution. This process, known as multiplexing, is what makes virtualization efficient. In hardware-assisted virtualization, features like Intel VT-x or AMD-V improve performance by having the CPU itself trap sensitive guest instructions and hand control to the hypervisor. Without these features, that work would have to be handled in software.
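To illustrate fair CPU distribution, here is a minimal proportional-share scheduler. Each VM has a weight, and on every tick the scheduler runs the VM that has received the least CPU time relative to its weight. This simplified stand-in was chosen for clarity and is similar in spirit to stride or fair-share scheduling. It is not the algorithm any particular hypervisor uses, and the VM names and weights are made up.

```python
import heapq
from collections import Counter


def schedule(weights, ticks):
    """Proportional-share scheduling: return how many ticks each VM received."""
    # Heap entries: (virtual_time, name). A VM's virtual time advances by
    # 1/weight for each tick it runs, so higher-weight VMs are picked more often.
    # Ties are broken by name.
    heap = [(0.0, name) for name in weights]
    heapq.heapify(heap)
    received = Counter()
    for _ in range(ticks):
        vtime, name = heapq.heappop(heap)   # VM furthest behind its fair share
        received[name] += 1                  # give it one time slice
        heapq.heappush(heap, (vtime + 1 / weights[name], name))
    return dict(received)


if __name__ == "__main__":
    # A VM with weight 2 should get twice the CPU time of a VM with weight 1.
    print(schedule({"web": 2, "batch": 1}, ticks=300))  # {'batch': 100, 'web': 200}
```

Weights express relative priority rather than fixed reservations. If a VM is removed, the remaining VMs automatically split its share.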

In cloud computing, the same resource management translates to on-demand scaling. Providers like AWS use virtualization to spin up instances quickly, allowing users to pay only for what they use. Looking toward 2025, advances in edge virtualization are likely to make these processes even faster and more secure.
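Spinning up instances on demand is usually driven by a simple control rule. The sketch below uses a proportional rule of the same form the Kubernetes Horizontal Pod Autoscaler documents: desired = ceil(current × observed ÷ target). The `desired_instances` name, the 60% CPU target, and the 1 to 20 instance bounds are assumptions. This is not how AWS implements its own scaling policies.

```python
def desired_instances(current, cpu_percent, target_percent=60, minimum=1, maximum=20):
    """Return how many instances to run so average CPU lands near the target.

    Proportional rule: desired = ceil(current * observed / target), clamped
    to [minimum, maximum]. Integer arithmetic avoids float rounding surprises.
    Target and bounds are illustrative assumptions.
    """
    if current <= 0:
        return minimum
    wanted = -(-current * cpu_percent // target_percent)  # ceiling division on ints
    return max(minimum, min(maximum, wanted))


if __name__ == "__main__":
    print(desired_instances(4, 90))   # busy fleet: scale out to 6
    print(desired_instances(6, 20))   # idle fleet: scale in to 2
```

Scaling in is what makes pay-for-what-you-use work. Idle capacity is released rather than left running.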

Benefits and Challenges of Virtualization

The advantages of virtualization are compelling. It boosts efficiency by allowing better resource use, reduces hardware costs, and enhances disaster recovery through easy backups and migrations. In cloud computing, it enables rapid deployment, which is vital for businesses adapting to market changes.

However, it’s not without drawbacks. Performance can suffer if resources are over-allocated, and the attack surface grows as the number of virtual machines to patch and monitor increases. Additionally, the initial setup requires expertise. Looking ahead, emerging risks such as quantum attacks on today’s encryption may pose new challenges for virtualization security.
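A quick way to spot over-allocation is to compare what has been promised to VMs with what the host physically has. The sketch below computes vCPU-to-core and memory overcommit ratios for one host. The `overcommit_report` function, the inventory, and the warning thresholds (4:1 for CPU, 1.0x for memory) are illustrative assumptions, because acceptable ratios depend heavily on the workload.

```python
def overcommit_report(host_cores, host_mem_gb, vms, cpu_limit=4.0, mem_limit=1.0):
    """Return (vCPU:core ratio, memory ratio, warnings) for one host.

    vms is a list of (name, vcpus, mem_gb) tuples (hypothetical inventory).
    The default limits are illustrative, not vendor recommendations.
    """
    total_vcpus = sum(vcpus for _, vcpus, _ in vms)
    total_mem = sum(mem for _, _, mem in vms)
    cpu_ratio = total_vcpus / host_cores
    mem_ratio = total_mem / host_mem_gb
    warnings = []
    if cpu_ratio > cpu_limit:
        warnings.append(f"CPU overcommitted {cpu_ratio:.1f}:1 (limit {cpu_limit}:1)")
    if mem_ratio > mem_limit:
        warnings.append(f"memory overcommitted {mem_ratio:.2f}x (limit {mem_limit}x)")
    return cpu_ratio, mem_ratio, warnings


if __name__ == "__main__":
    vms = [("web", 8, 32), ("db", 16, 96), ("ci", 8, 32)]
    print(overcommit_report(host_cores=16, host_mem_gb=128, vms=vms))
    # (2.0, 1.25, ['memory overcommitted 1.25x (limit 1.0x)'])
```

CPU overcommit is often tolerable because VMs rarely peak at the same time. Memory overcommit is riskier, since a host that runs out of RAM forces swapping or ballooning.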

Real-World Applications

From healthcare to finance, virtualization is everywhere. In automotive, as Thundercomm’s platforms illustrate, it’s used for safe, high-performance computing in vehicles. In education, it allows students to experiment with different operating systems without risk.

The Future of Virtualization

By 2025, virtualization is expected to integrate more deeply with AI and edge computing, enabling smarter, more autonomous systems.

Conclusion

In summary, virtualization is a powerful tool that continues to shape computing.

Frequently Asked Questions

What is the difference between virtualization and cloud computing?

Virtualization is the underlying technology that enables cloud computing, but they aren’t the same. Virtualization creates virtual environments on physical hardware, while cloud computing is a service model that delivers these virtual resources over the internet on a pay-as-you-go basis. In most cases, cloud providers use virtualization to offer scalable services, making it easier for businesses to access computing power without maintaining their own infrastructure.

How does virtualization improve security?

Virtualization enhances security by isolating workloads in separate virtual machines, which prevents issues in one environment from affecting others. For example, if a virtual machine is compromised, the hypervisor can contain the damage. However, it also introduces new risks, like hypervisor vulnerabilities, so proper configuration and regular updates are essential for maintaining a secure setup.

Can I use virtualization on my personal computer?

Absolutely, and it’s quite straightforward with tools like VirtualBox or Hyper-V. You can run multiple operating systems on your PC for testing or development. Keep in mind that it requires sufficient hardware resources, such as a modern CPU with virtualization support, to avoid performance issues.

What are the hardware requirements for virtualization?

Typically, you’ll need a 64-bit processor with extensions like Intel VT-x or AMD-V, at least 4GB of RAM, and enough storage space. For optimal performance, ensure your system has a solid-state drive and multiple cores. As technology advances into 2025, even budget hardware is expected to support basic virtualization features.
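On Linux, you can check whether the processor advertises these extensions by looking for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below does that. It is Linux-specific, and the `virtualization_extension` name is an assumption. A missing flag may also mean the feature is disabled in firmware, or that you are already inside a VM without nested virtualization.

```python
def virtualization_extension(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None based on the flags in /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):                      # e.g. "flags\t\t: fpu vme ... vmx ..."
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:                  # Linux-only file
            print(virtualization_extension(f.read()) or "no VT-x/AMD-V flag found")
    except FileNotFoundError:
        print("/proc/cpuinfo not available (not running on Linux?)")
```

On Windows, Task Manager's Performance tab shows the same information as "Virtualization: Enabled/Disabled".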

Is virtualization cost-effective for small businesses?

In many cases, yes. It reduces the need for physical servers, lowering hardware and energy costs. Small businesses can use virtualization to consolidate resources and scale as needed. However, the initial investment in software and training might be a barrier, so it’s wise to start with cloud-based solutions for easier entry.

How does virtualization impact the environment?

Virtualization promotes sustainability by optimizing resource use. Consolidating workloads means fewer physical servers are needed, which reduces energy consumption and e-waste. As a result, data centers that consolidate with virtualization can cut their carbon footprint significantly, making it a green choice for eco-conscious organizations.

What skills are needed to work with virtualization?

A solid understanding of networking, operating systems, and scripting languages like PowerShell or Python is beneficial. Certifications such as VMware Certified Professional can also help. As we move toward 2025, skills in AI and automation will become increasingly important for managing virtualized environments.

Can virtualization be used in mobile devices?

Yes, though it’s more common in enterprise settings. Technologies like containerization allow apps to run in isolated environments on smartphones. In 2025, with the rise of 5G, we might see more advanced mobile virtualization for secure, on-the-go computing.

Hi, I’m Nghia Vo: a computer hardware graduate, passionate PC hardware blogger, and entrepreneur with extensive hands-on experience building and upgrading computers for gaming, productivity, and business operations.

As the founder of Vonebuy.com, a verified ecommerce store under Vietnam’s Ministry of Industry and Trade, I combine my technical knowledge with real-world business applications to help users make confident decisions.

I specialize in no-nonsense guides on RAM overclocking, motherboard compatibility, SSD upgrades, and honest product reviews sharing everything I’ve tested and implemented for my customers and readers.