Introduction to Deep Learning

Deep learning has transformed the way we interact with technology, powering everything from voice assistants to self-driving cars. At its core, deep learning is a subset of machine learning that uses multilayered artificial neural networks to process vast amounts of data and uncover patterns that traditional methods might miss. The field draws loose inspiration from biological neuroscience: artificial neurons are stacked into layers, and the connections between them are adjusted as the network learns from examples.

To understand deep learning, let’s start with its origins. The concept emerged in the 1950s with early neural network models, but it gained significant momentum in the 2010s thanks to advances in computing power and the availability of large datasets. Today, it’s a cornerstone of artificial intelligence, enabling machines to perform tasks like image recognition, language translation, and predictive analytics with remarkable accuracy. For instance, social media platforms use deep learning to analyze user behavior and recommend content, making our digital experiences more personalized.

One key aspect that sets deep learning apart is its use of multilayered neural networks. These networks consist of interconnected nodes, or neurons, organized in layers: an input layer that receives data, hidden layers that process it, and an output layer that delivers results. The “deep” in deep learning refers to the presence of multiple hidden layers, which allow the system to handle intricate data relationships. According to sources like Wikipedia, deep learning methods can be supervised, where labeled data guides the training, semi-supervised, which mixes labeled and unlabeled data, or unsupervised, where the algorithm finds patterns on its own.

As we delve deeper, it’s fascinating to see how deep learning has been adopted across academia and industry. For example, the interactive book “Dive into Deep Learning” from D2L.ai, used in over 500 universities worldwide, highlights practical implementations with code from various frameworks. This accessibility has democratized the field, allowing beginners to experiment with Python libraries like TensorFlow or PyTorch. In everyday terms, deep learning isn’t just about fancy tech; it’s about solving real problems, like detecting diseases from medical images or optimizing traffic flow in smart cities. By the end of this article, you’ll grasp not only the basics but also how to apply these concepts in innovative ways.

The Foundations of Neural Networks

Before we dive into the intricacies of deep learning, it’s essential to build a solid foundation in neural networks, the building blocks of this technology. A neural network is essentially a computational model inspired by the human brain’s structure, consisting of layers of interconnected nodes that process information through weighted connections. Each node, or neuron, takes in inputs, applies a mathematical function, and passes the output to the next layer, allowing the network to learn from data iteratively.

The basic unit of a neural network is the artificial neuron, which simulates a biological neuron by receiving signals, processing them, and firing an output if the signal is strong enough. This process involves weights that determine the importance of each input and a bias term that shifts the output. For example, in a simple feedforward network, data flows from the input layer through hidden layers to the output, with each connection adjusted during training to minimize errors. This adjustment is handled by algorithms like backpropagation, which calculates the gradient of the loss function and updates the weights accordingly.
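To make the weighted-sum idea concrete, here is a minimal sketch of a single artificial neuron in plain Python. The sigmoid activation and the input, weight, and bias values are assumptions chosen for illustration; the text above does not prescribe a particular activation function.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values only: the first input carries more weight than the second.
output = neuron(inputs=[1.0, 0.5], weights=[0.8, -0.4], bias=0.1)
print(round(output, 3))
```

Raising the bias pushes the output toward 1 for the same inputs, which is what "shifting the output" means in practice.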

Deep learning expands on these basics by stacking multiple layers, creating what’s known as a deep neural network. These layers enable the model to capture hierarchical features: the first layer might detect edges in an image, while deeper layers recognize more complex patterns like shapes or objects. Research from sources like Wikipedia emphasizes that deep learning draws from various techniques, including convolutional neural networks (CNNs) for visual data and recurrent neural networks (RNNs) for sequential data. A key advantage is the ability to learn from unstructured data, such as raw images or text, without needing manual feature engineering.

In practice, training a neural network requires substantial data and computational resources. For instance, using a GPU-accelerated setup, you might run code in a Jupyter notebook to train a model on a dataset like MNIST for handwritten digit recognition. This hands-on approach reveals how deep learning models improve over successive epochs, where each epoch is one full pass over the training data. However, it’s not without challenges: overfitting, where the model performs well on training data but poorly on new data, calls for mitigation techniques such as dropout or weight regularization.

Looking ahead to 2025 trends, we’re seeing neural networks evolve with hybrid approaches, such as combining them with reinforcement learning for autonomous systems. In fields like healthcare, deep learning models are being used to analyze biomedical data, as highlighted in recent studies on compressed sensing and AI applications. Overall, understanding these foundations equips you to appreciate the power of deep learning and experiment with it yourself, whether you’re building a simple classifier or tackling advanced projects.

Key Architectures in Deep Learning

When it comes to deep learning, the architecture of the neural network is what defines its capabilities and applications. Different architectures are designed to handle specific types of data and tasks, making them versatile tools in the AI toolkit. Let’s explore some of the most influential ones, drawing from established knowledge in the field.

First up is the fully connected neural network, often the starting point for beginners. In this setup, every neuron in one layer is connected to every neuron in the next, allowing for comprehensive data processing. It’s great for tasks like regression or classification on tabular data, but it becomes inefficient for high-dimensional inputs such as images, because the number of weights grows with the product of the sizes of adjacent layers. In a fully connected network, you might use the ReLU activation function to introduce non-linearity, helping the model learn complex patterns without saturating for positive inputs.
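As a rough sketch of what "every neuron connected to every neuron" means, the plain-Python example below runs one fully connected layer with ReLU and counts its parameters. The weights, biases, and the 784-to-128 layer size are made-up values for illustration, not taken from any particular model.

```python
from typing import List, Sequence


def relu(z: float) -> float:
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, z)


def dense_layer(
    inputs: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> List[float]:
    """weights[j][i] connects input i to output neuron j."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def parameter_count(n_inputs: int, n_outputs: int) -> int:
    """One weight per input/output pair, plus one bias per output neuron."""
    return n_inputs * n_outputs + n_outputs


# Three inputs feeding two hidden neurons (illustrative numbers).
hidden = dense_layer(
    inputs=[1.0, 2.0, 3.0],
    weights=[[0.5, -1.0, 0.25], [-0.5, 0.5, 0.5]],
    biases=[0.0, 0.1],
)
print(hidden)

# A flattened 28x28 image feeding 128 neurons already needs about 100,000 parameters.
print(parameter_count(784, 128))
```

The first hidden neuron outputs zero because its weighted sum is negative and ReLU clamps it, while the second passes its positive sum through unchanged.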

Then there’s the convolutional neural network (CNN), a powerhouse for image and video processing. CNNs use filters to scan input data, capturing local features like edges and textures through convolutional layers. This architecture, inspired by the visual cortex of the brain, has revolutionized computer vision tasks, such as object detection in photos. A classic example is using CNNs in facial recognition systems, where layers progressively identify features from basic shapes to full faces. Research from sources like IEEE papers shows CNNs being applied to vulnerability detection in blockchain, demonstrating their adaptability.
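The filter-scanning step can be sketched in a few lines of plain Python. This is a simplified version with no padding and a stride of 1, and like most deep learning frameworks it computes a cross-correlation (the kernel is not flipped). The tiny image and the hand-written vertical-edge kernel are assumptions for illustration; in a real CNN the kernel values are learned during training.

```python
from typing import List, Sequence


def conv2d(
    image: Sequence[Sequence[float]],
    kernel: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Slide the kernel over the image and sum the element-wise products."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, which is exactly where the dark-to-bright edge sits in the input.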

Another key architecture is the recurrent neural network (RNN), which excels at sequential data like time series or natural language. Unlike feedforward networks, RNNs have loops that allow information to persist, making them ideal for tasks involving context over time, such as predicting stock prices or generating text. However, traditional RNNs struggle with long-term dependencies because gradients tend to vanish as they are propagated back through many time steps. This led to variants like long short-term memory (LSTM) networks, which use gates to control information flow and mitigate the vanishing gradient problem.
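The "loop" in an RNN is easier to see in code. Below is a minimal sketch of a recurrent cell with a single hidden unit, using a tanh activation; the weight values are arbitrary assumptions, and real RNNs use weight matrices learned from data rather than hand-picked scalars.

```python
import math
from typing import List, Sequence


def rnn_step(x: float, h_prev: float, w_x: float, w_h: float, b: float) -> float:
    """One time step: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(
    sequence: Sequence[float],
    w_x: float = 0.5,
    w_h: float = 0.8,
    b: float = 0.0,
) -> List[float]:
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# A single "pulse" at the first step, followed by silence.
states = run_rnn([1.0, 0.0, 0.0])
print([round(h, 3) for h in states])
```

The hidden state stays positive after the input drops to zero, so the cell still remembers the pulse, but the memory shrinks at every step. That fading is a small-scale picture of why plain RNNs have trouble with long-term dependencies.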

More recently, generative adversarial networks (GANs) have emerged as a creative force in deep learning. GANs consist of two networks, a generator and a discriminator, that compete against each other to produce realistic data, such as synthetic images or music. This adversarial training has applications in art, data augmentation, and even drug discovery. Additionally, transformers, introduced in 2017, have taken the spotlight for their efficiency in handling sequences without RNNs, powering models like those behind chatbots and language translation services.

As we look toward 2025, new architectures are blending these elements, such as vision transformers that apply transformer mechanisms to images. According to general trends in AI, these innovations are making deep learning more accessible and efficient, with frameworks like TensorFlow providing pre-built models for rapid prototyping. Whether you’re designing a network for speech recognition or predictive maintenance, choosing the right architecture is crucial for success.

Training and Optimization Techniques

Training a deep learning model is where the magic happens, but it’s also one of the most intricate parts of the process. It involves feeding data into a neural network, adjusting parameters, and optimizing performance to achieve accurate predictions. Let’s break this down step by step, incorporating insights from reliable sources to ensure a thorough understanding.

The training process begins with preparing your dataset, which should be large, diverse, cleaned, and checked for biases that the model could otherwise learn. In supervised learning, you pair inputs with labels, allowing the model to learn from examples. The standard technique is backpropagation, which computes the error at the output and propagates it backward through the layers to obtain the gradient of the loss with respect to each weight. An optimizer such as stochastic gradient descent (SGD) then uses those gradients to adjust the parameters in small steps, gradually reducing the loss.
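Here is a minimal sketch of that loop in plain Python, fitting a one-weight, one-bias model with per-sample SGD. With a single layer, backpropagation reduces to one application of the chain rule, so this shows the update step rather than a full multi-layer backward pass. The toy dataset (points on the line y = 2x + 1), the learning rate, and the epoch count are assumptions chosen for illustration.

```python
import random
from typing import List, Tuple


def train_linear(
    data: List[Tuple[float, float]],
    learning_rate: float = 0.05,
    epochs: int = 200,
    seed: int = 0,
) -> Tuple[float, float]:
    """Fit y = w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the samples in a new order each epoch
        for x, y in samples:
            error = (w * x + b) - y
            # Gradients of the loss 0.5 * error**2 with respect to w and b.
            grad_w = error * x
            grad_b = error
            # Step against the gradient, scaled by the learning rate.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Noise-free labeled examples drawn from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b = train_linear(data)
print(round(w, 3), round(b, 3))
```

On this toy data the learned values settle very close to the true slope and intercept. A learning rate that is too large would make the updates overshoot instead, which is why it is one of the first hyperparameters people tune.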

Optimization goes beyond basic training; it includes strategies to improve efficiency and accuracy. For instance, batch normalization helps stabilize the learning process by normalizing the inputs to each layer, reducing the risk of vanishing or exploding gradients. Another technique is dropout, which randomly drops neurons during training to prevent overfitting, ensuring the model generalizes well to new data. In practice, you might use tools like Google Colab to run experiments, tweaking hyperparameters such as learning rate or number of epochs to fine-tune results.
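Both techniques are simple enough to sketch in plain Python. The batch normalization below only standardizes a batch of activations; the learned scale and shift parameters and the running statistics that framework implementations add are left out. The dropout function uses the common "inverted" form, which scales the surviving activations so their expected value stays the same. The sample values and the 0.5 drop rate are assumptions for illustration.

```python
import math
import random
from typing import List, Sequence


def batch_norm(values: Sequence[float], eps: float = 1e-5) -> List[float]:
    """Standardize a batch of activations to zero mean and unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + eps) for v in values]


def dropout(
    activations: Sequence[float],
    rate: float,
    rng: random.Random,
    training: bool = True,
) -> List[float]:
    """Zero each activation with probability `rate` and rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)  # dropout is switched off at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


normalized = batch_norm([2.0, 4.0, 6.0, 8.0])
print([round(v, 3) for v in normalized])

rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))
```

Note the `training` flag: dropout is only applied while the model is learning, and the full network is used when making predictions.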

Advanced methods, like transfer learning, take models pre-trained on massive datasets, such as ImageNet, and adapt them to specific tasks. This is particularly useful in resource-constrained environments, where training from scratch isn’t feasible. Research from Springer publications highlights how these techniques are applied in biomedical fields, such as using deep learning for disease prediction with limited data. As of 2025, there is also a shift toward automated techniques, like neural architecture search, which uses algorithms to search for well-performing network designs.

Ethical considerations are also key in optimization. Ensuring fairness in training data helps mitigate biases that could lead to discriminatory outcomes, such as in facial recognition systems. Techniques like data augmentation, artificially expanding your dataset through transformations, can enhance robustness. Overall, mastering these methods not only boosts model performance but also prepares you for real-world deployment.
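Data augmentation can be as simple as mirroring images. The sketch below represents an image as a nested list of pixel values and doubles a dataset by adding a horizontally flipped copy of each example. The tiny 2x3 image and its label are hypothetical, and the sketch assumes that a mirror image keeps the same label, which holds for many photos but not for things like handwritten digits or text.

```python
from typing import List, Tuple

Image = List[List[int]]


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def augment(dataset: List[Tuple[Image, str]]) -> List[Tuple[Image, str]]:
    """Keep every original example and add a mirrored copy with the same label."""
    augmented = []
    for image, label in dataset:
        augmented.append((image, label))
        augmented.append((flip_horizontal(image), label))
    return augmented


# One hypothetical labeled example.
dataset = [([[1, 2, 3], [4, 5, 6]], "cat")]
augmented = augment(dataset)

for image, label in augmented:
    print(label, image)
```

Libraries usually apply transformations like this on the fly during training, with random rotations, crops, and color shifts added to the mix.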

Real-World Applications of Deep Learning

Deep learning’s true value shines in its diverse applications across industries, solving problems that were once thought impossible. From healthcare to finance, this technology is driving innovation and efficiency, as evidenced by various studies and real-world implementations.

In healthcare, deep learning is revolutionizing diagnostics through convolutional neural networks that analyze medical images for early detection of conditions like cancer. For example, models trained on X-rays have, in some studies, identified anomalies with accuracy rivaling that of human experts, which could save lives through timely interventions. Springer books on the topic discuss how AI integrates with soft computing for biomedical applications, including personalized treatment plans based on patient data.

The transportation sector benefits from deep learning in autonomous vehicles, where neural networks process camera and other sensor data to make split-second decisions. Companies like Tesla use these systems to navigate complex environments, with the goal of reducing accidents and improving traffic flow. Looking ahead to 2025, expect advancements in edge computing, allowing vehicles to process data on-device for faster responses.

In finance, deep learning powers fraud detection and algorithmic trading. By analyzing transaction patterns, models can flag suspicious activity in real time, protecting users from fraud. Reinforcement learning, a separate branch of machine learning that is often combined with deep neural networks, is used to optimize investment strategies by learning from market fluctuations. This ties into broader AI applications, as noted in Wikipedia’s overview of artificial intelligence in finance.

Other areas, like education and entertainment, see deep learning enhancing user experiences. Adaptive learning platforms use it to tailor content to individual students, while streaming services recommend movies based on viewing history. As we integrate these technologies, the focus remains on ethical AI, ensuring privacy and accessibility for all.

Challenges and Ethical Considerations

Despite its benefits, deep learning isn’t without hurdles. Challenges range from technical limitations to ethical dilemmas, requiring careful navigation to ensure responsible use.

One major issue is the need for massive datasets, which can lead to privacy concerns and data biases. For instance, if training data is skewed, the model might perpetuate inequalities, as seen in some facial recognition systems. Ethical frameworks are emerging to address this, emphasizing transparency and fairness in AI development.

Computational demands also pose problems, with models requiring powerful hardware like GPUs. This can exclude smaller organizations, widening the AI gap. On the data side, approaches like federated learning train models on decentralized data, so raw records never have to leave the devices or organizations that hold them.
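The core idea of federated learning can be sketched as a loop of local training followed by averaging, often called federated averaging. In the plain-Python sketch below, each client improves a one-weight model on its own data and the server only ever sees the resulting weights. The three client datasets (all following y = 3x), the learning rate, and the step counts are assumptions for illustration; production systems add secure aggregation, client sampling, and much larger models.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.1, steps: int = 20) -> float:
    """Client side: gradient descent on y = w * x using only local data."""
    for _ in range(steps):
        grad = sum((w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w: float, clients: List[Dataset]) -> float:
    """Server side: average the client weights, weighted by dataset size."""
    total = sum(len(data) for data in clients)
    return sum(local_update(global_w, data) * len(data) for data in clients) / total


# Each inner list stays on its own device; only weights are shared.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
print(round(w, 4))
```

The shared weight converges toward the slope that fits all clients, even though no client ever reveals its raw data points to the server.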

Future Trends in Deep Learning

Looking ahead, deep learning is poised for exciting advancements, shaping the next wave of AI innovation by 2025 and beyond.

Emerging trends include the integration of quantum computing, which could accelerate training times for complex models. Additionally, explainable AI is gaining traction, making neural networks more interpretable for critical applications like healthcare.

Frequently Asked Questions

What is the difference between deep learning and machine learning?

Deep learning is a subset of machine learning that uses multilayered neural networks to automatically learn features from data, while machine learning encompasses broader techniques that may require manual feature engineering.

How does deep learning work?

Deep learning works by processing data through layers of artificial neurons in a neural network, adjusting weights via backpropagation to minimize errors and improve predictions over time.

What are some popular tools for deep learning?

Popular tools include frameworks like TensorFlow and PyTorch, which provide libraries for building and training neural networks efficiently.

Is deep learning suitable for beginners?

Yes, with accessible resources and online courses, beginners can start with basic neural networks, though it requires understanding of math and programming.

What industries benefit most from deep learning?

Industries like healthcare, finance, and transportation benefit greatly, as deep learning enhances diagnostics, fraud detection, and autonomous systems.

What are the limitations of deep learning?

Deep learning requires large datasets and computational power, and it can suffer from overfitting or biases if not managed properly.

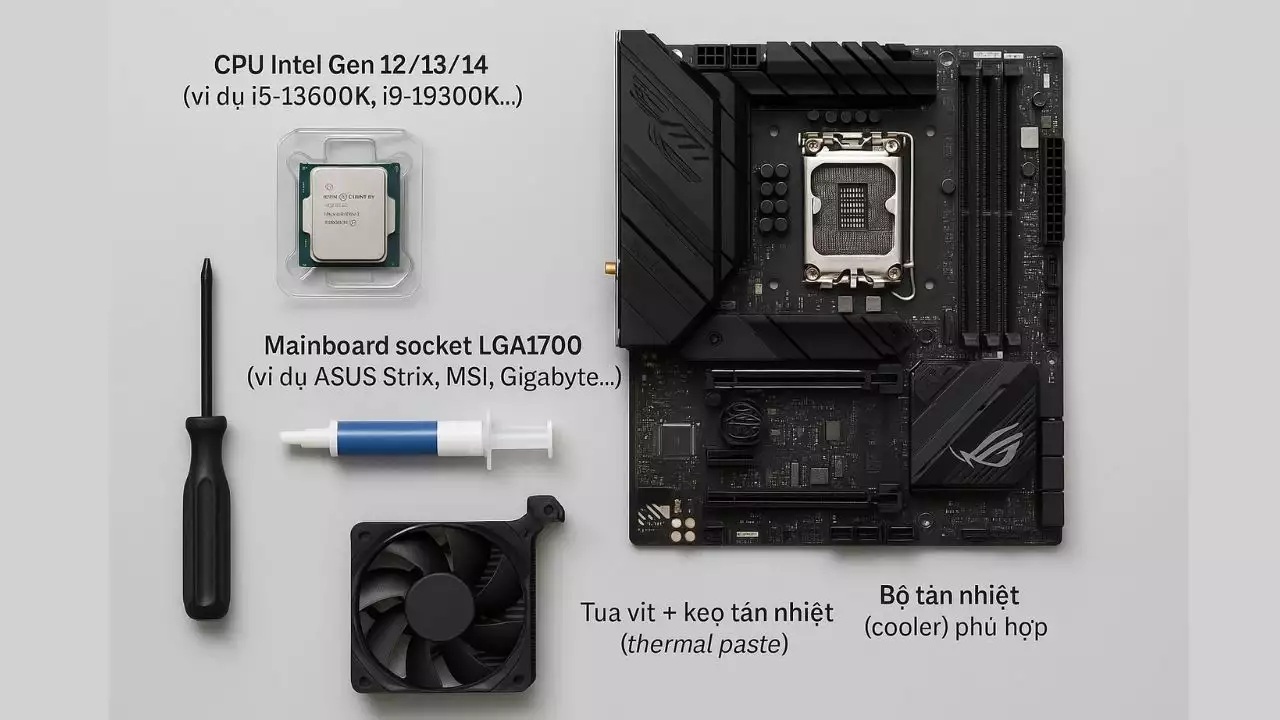

Hi, I’m Nghia Vo: a computer hardware graduate, passionate PC hardware blogger, and entrepreneur with extensive hands-on experience building and upgrading computers for gaming, productivity, and business operations.

As the founder of Vonebuy.com, a verified ecommerce store under Vietnam’s Ministry of Industry and Trade, I combine my technical knowledge with real-world business applications to help users make confident decisions.

I specialize in no-nonsense guides on RAM overclocking, motherboard compatibility, and SSD upgrades, as well as honest product reviews, sharing everything I’ve tested and implemented for my customers and readers.