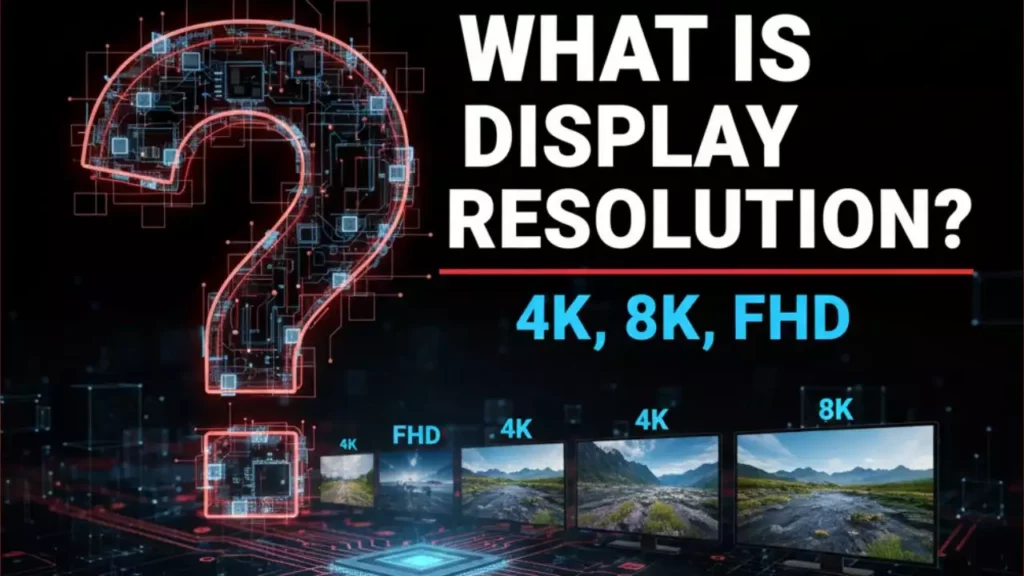

Understanding Display Resolution

In an increasingly visual world, the quality of our screens profoundly impacts how we interact with technology, consume media, and even perform our daily tasks. From the smartphone in your pocket to the massive television dominating your living room, the clarity and detail of the images displayed are paramount. At the heart of this visual experience lies a fundamental concept: display resolution. It’s a term frequently tossed around in tech discussions, often accompanied by acronyms like FHD, 4K, and 8K, but what exactly do these terms mean, and why should they matter to you?

Understanding display resolution is akin to understanding the canvas upon which all digital images are painted. It dictates the sharpness, intricacy, and overall fidelity of what you see. As technology advances at a breakneck pace, the pursuit of higher resolutions continues, promising ever more immersive and lifelike visuals. This exploration will demystify display resolution, breaking down its core components, examining the popular FHD, 4K, and 8K standards, and guiding you through the considerations for choosing the perfect display for your unique needs. Whether you’re a hardcore gamer, a cinephile, a creative professional, or simply someone looking to upgrade their home entertainment, a clear grasp of resolution is your first step towards an optimal visual journey.

What is Display Resolution?

At its simplest, display resolution refers to the number of individual pixels that a screen can display, both horizontally and vertically. Think of a screen as a grid, much like a checkerboard. Each square on that grid is a tiny, illuminated point called a pixel (short for “picture element”). The resolution is typically expressed as “width x height”, for example, 1920 x 1080. This means the screen has 1920 pixels across its width and 1080 pixels stacked vertically. Multiplying these two numbers gives you the total number of pixels on the screen. For 1920 x 1080, that’s 2,073,600 pixels, or just over 2 million, working together to form an image.
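The multiplication described above is simple enough to check directly. The following is a minimal sketch; the function name `total_pixels` is purely illustrative and not taken from any library.

```python
def total_pixels(width: int, height: int) -> int:
    """Return the pixel count of a width x height grid."""
    return width * height


# The example resolution from the text.
fhd_pixels = total_pixels(1920, 1080)
print(f"1920 x 1080 = {fhd_pixels:,} pixels")  # 1920 x 1080 = 2,073,600 pixels
```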

The higher the number of pixels, the more detailed and sharper the image can appear. More pixels allow for finer lines, smoother curves, and a greater capacity to render intricate textures and subtle color gradations. This is why a higher resolution display can show more information on screen at once or display existing content with greater clarity. Beyond just the raw pixel count, another important related concept is pixel density, often measured in pixels per inch (PPI). This metric considers both the resolution and the physical size of the screen. A small screen with a high resolution will have a very high PPI, making images incredibly sharp, whereas a very large screen with the same resolution will have a lower PPI, potentially revealing individual pixels if viewed too closely. The interplay between these factors determines the ultimate visual experience.
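To make the relationship between resolution, screen size, and pixel density concrete, PPI can be estimated by dividing the length of the screen diagonal in pixels by its length in inches. The sketch below assumes square pixels, and the 13-inch and 55-inch sizes are illustrative choices rather than figures from a specific product.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: diagonal length in pixels divided by diagonal length in inches."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


# The same 1920 x 1080 resolution on two illustrative (assumed) screen sizes.
for size_in in (13, 55):
    ppi = pixels_per_inch(1920, 1080, size_in)
    print(f'{size_in}-inch FHD panel: about {ppi:.0f} PPI')
```

With these assumed sizes, the same resolution works out to roughly 169 PPI on the small panel and roughly 40 PPI on the large one, which is why the larger screen shows its pixels sooner as you move closer.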

Importance of Display Resolution in Technology

The significance of display resolution in modern technology cannot be overstated; it’s a foundational element that influences nearly every aspect of our digital lives. Firstly, it directly impacts visual fidelity. Higher resolutions mean sharper images, more detailed text, and a greater sense of realism in everything from photographs to video games. This enhanced clarity can also reduce eye strain during prolonged use, as images are less pixelated and easier to discern. For professionals working with detailed graphics, design, or high-resolution imagery, a higher resolution display provides critical precision, allowing them to see fine details without constant zooming.

Secondly, resolution dictates screen real estate. A higher pixel count allows more content to be displayed simultaneously on the screen. For instance, on a 4K monitor compared to an FHD one of the same size, you can comfortably arrange multiple windows side-by-side or view larger spreadsheets without excessive scrolling. This boosts productivity for tasks involving multitasking, coding, data analysis, or complex creative suites. Furthermore, display resolution is crucial for immersion in entertainment. Whether it’s the breathtaking landscapes in a modern video game or the cinematic sweep of a high-budget film, a higher resolution display pulls you deeper into the content, making the experience more lifelike and engaging. The evolution of resolution has also been a driving force behind advancements in related display technologies, such as HDR (High Dynamic Range), which further enhances contrast and color, complementing the increased pixel density to deliver a truly superior visual output.

Types of Display Resolutions

The landscape of display resolutions has evolved dramatically over the past two decades, moving from standard definition to the ultra-high definition displays we see today. While many resolutions exist, three primary categories dominate the consumer market: Full HD (FHD), 4K, and 8K. Each represents a significant leap in pixel count and visual potential, catering to different needs, budgets, and technological capabilities. Understanding the specifics of each of these will help clarify their roles in the current ecosystem of devices, from personal laptops and monitors to large-screen televisions and specialized professional displays.

The progression through these resolution tiers isn’t just about adding more pixels; it’s about pushing the boundaries of what’s visually possible, creating displays that can render images with astonishing clarity and depth. However, with each step up, comes increased demands on supporting hardware, content availability, and often, a higher price tag. This section will break down each of these major resolution types, detailing their specifications, common applications, and what makes them distinct in the ever-expanding world of digital visuals.

Full HD (FHD)

Full HD, often abbreviated as FHD or referred to as 1080p, has been the ubiquitous standard for high-definition displays for well over a decade. Its pixel dimensions are 1920 pixels horizontally by 1080 pixels vertically, totaling just over 2 million pixels. The “p” in 1080p stands for progressive scan, meaning all lines of the image are drawn in sequence from top to bottom, providing a stable and flicker-free picture, as opposed to interlaced scan (1080i) which draws odd and even lines alternately.

FHD displays quickly became the baseline for televisions, computer monitors, laptops, and smartphones due to their excellent balance of visual quality, affordability, and performance. For most everyday tasks such as web browsing, document editing, and video conferencing, FHD offers more than sufficient sharpness. In the realm of entertainment, a vast library of movies, TV shows, and video games are produced and distributed in FHD, making it a highly compatible and accessible format. While newer, higher resolutions have emerged, FHD remains a strong contender, particularly for budget-conscious consumers, competitive gamers who prioritize high frame rates over ultimate pixel density, and for devices where screen size or viewing distance doesn’t warrant the expense of 4K or 8K. Its widespread adoption means that hardware requirements (like GPU power) are relatively modest, allowing for smooth operation across a broad range of systems.

4K Resolution

4K resolution, also widely known as Ultra High Definition (UHD), represents a significant leap forward from FHD. Its standard pixel dimensions are 3840 pixels horizontally by 2160 pixels vertically. This is exactly four times the total pixels of an FHD display, packing about 8.3 million pixels onto the screen. The “4K” moniker refers to the approximately 4,000 horizontal pixels, unlike the 1080p standard, which is named after its vertical pixel count.

The advent of 4K has revolutionized the visual experience, particularly for large-screen televisions and high-end computer monitors. The quadrupled pixel count delivers an astonishing level of detail, making images incredibly sharp and lifelike. Text appears crisper, edges are smoother, and fine textures in photos and videos are rendered with remarkable clarity. This makes 4K displays ideal for immersive media consumption, such as watching movies and TV shows from streaming services like Netflix, Disney+, and Amazon Prime Video, which offer a growing library of 4K HDR content. Gamers with powerful graphics cards can also enjoy breathtaking visual fidelity and deeper immersion in their favorite titles. Beyond entertainment, 4K monitors are highly valued by creative professionals, including graphic designers, video editors, and photographers, as they provide an expansive workspace and the precision needed for detailed work. The increased pixel density allows for more content to be displayed without sacrificing clarity, enhancing productivity for multitasking.

8K Resolution

Stepping into the vanguard of display technology, 8K resolution pushes the boundaries of visual fidelity even further. With a staggering pixel dimension of 7680 pixels horizontally by 4320 pixels vertically, an 8K display boasts over 33 million pixels. To put this into perspective, that’s four times the pixel count of 4K and a monumental sixteen times that of Full HD. In raw pixel count, the jump from 4K to 8K is the same fourfold increase as the leap from FHD to 4K, promising an unprecedented level of detail and realism.
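The fourfold and sixteenfold ratios follow directly from the pixel dimensions quoted for each standard. As a quick check, a short sketch (the dictionary and helper names are illustrative only):

```python
# Pixel dimensions quoted in the text for each standard.
RESOLUTIONS = {
    "FHD": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Total pixels for one of the named resolutions."""
    width, height = RESOLUTIONS[name]
    return width * height


for name in RESOLUTIONS:
    ratio = pixel_count(name) // pixel_count("FHD")
    print(f"{name}: {pixel_count(name):,} pixels ({ratio}x FHD)")
```

Doubling both the width and the height is what produces the factor of four at each step.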

Currently, 8K technology is at the bleeding edge of the consumer market. It is predominantly found in very large, premium televisions and a select few specialized professional monitors. The primary advantage of 8K is its ability to render images with such incredible sharpness that, at typical viewing distances, individual pixels become virtually imperceptible, creating a truly seamless and “window-like” visual experience. This hyper-realistic clarity is particularly impactful on screens 65 inches and larger, where the benefits of increased pixel density become more apparent. For professional applications, such as medical imaging, high-end film production, and scientific visualization, 8K offers unparalleled precision. However, the 8K ecosystem is still in its infancy. Native 8K content is extremely limited, and the hardware required to drive an 8K display, including powerful GPUs and high-bandwidth connectivity like HDMI 2.1, is both expensive and demanding. While it represents the ultimate in current display technology, 8K is largely a future-proofing investment for early adopters and specific niche markets.

Comparison of Display Resolutions

Understanding the individual specifications of FHD, 4K, and 8K is crucial, but their differences become truly apparent when viewed in direct comparison. The evolution from one resolution to the next isn’t merely incremental; each step multiplies the pixel count by four, leading to profound differences in visual quality, common applications, and the underlying technological demands. This comparison aims to highlight these distinctions, providing a clearer picture of where each resolution stands in the current display landscape.

The most straightforward way to compare these resolutions is by their raw pixel dimensions and the resulting total pixel count. As we move from FHD to 4K and then to 8K, the number of pixels quadruples at each major step. This increase directly correlates with the amount of detail a screen can display. However, the perceived “visual quality” isn’t solely about the numbers; it’s also influenced by screen size, viewing distance, and the quality of the content being displayed. Perceived sharpness depends on how many pixels fall within each degree of your field of view, so a small FHD screen viewed up close can appear just as sharp as a larger 4K screen viewed from farther away. Nonetheless, the inherent capability of higher resolution displays to render finer details remains a core differentiator.
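The idea of pixel density at the viewer’s eye can be expressed as pixels per degree of vision, which combines PPI with viewing distance. The sketch below is a rough geometric approximation measured at the center of a flat screen; the screen sizes and distances are illustrative assumptions, not recommendations.

```python
import math


def pixels_per_degree(width_px: int, height_px: int,
                      diagonal_in: float, distance_in: float) -> float:
    """Approximate number of pixels spanned by one degree of vision at screen center."""
    ppi = math.hypot(width_px, height_px) / diagonal_in
    # Length on the screen surface (in inches) covered by a one-degree viewing angle.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


# Assumed scenarios: a 6-inch FHD phone held 12 inches away,
# and a 65-inch 4K TV watched from 72 inches (6 feet).
phone = pixels_per_degree(1920, 1080, 6, 12)
tv = pixels_per_degree(3840, 2160, 65, 72)
print(f"Phone: {phone:.0f} px/degree, TV: {tv:.0f} px/degree")
```

Changing the distance argument shows how the same panel looks sharper from farther back: doubling the viewing distance roughly doubles the pixels per degree.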

To put these resolutions into perspective, let’s look at a direct comparison:

| Resolution | Pixel Dimensions (Width x Height) | Total Pixels (Approx.) | Common Uses | Visual Quality |
|---|---|---|---|---|
| FHD | 1920 x 1080 | 2.07 million | TVs, Laptops, Mainstream Monitors | Good, Clear, Standard |
| 4K | 3840 x 2160 | 8.3 million | Gaming, Streaming, Professional Work, High-End TVs | Very Good, Sharp, Detailed |
| 8K | 7680 x 4320 | 33.2 million | High-End TVs, Cinema, Specialized Professional Applications | Excellent, Ultra-Realistic, Seamless |

This table provides a quick overview, but the true impact lies in the details. FHD remains a solid choice for general use, especially where budget or hardware limitations are a concern. Its pixel density is perfectly adequate for typical laptop and monitor sizes, and for TVs viewed from a reasonable distance. 4K has become the new standard for premium visual experiences, offering a discernible upgrade in sharpness and detail that significantly enhances gaming, movie watching, and professional productivity. The content ecosystem for 4K is robust, with widespread support from streaming services, game developers, and content creators. 8K, on the other hand, is a luxury technology. While it offers unparalleled pixel density, its benefits are most pronounced on very large screens (65 inches and above) and at close viewing distances. The lack of native 8K content means that much of what is displayed on an 8K screen is upscaled from lower resolutions, relying heavily on sophisticated processing to fill in the extra pixels. This upscaling can be impressive, but it’s not the same as native 8K content. Furthermore, the hardware demands for 8K are immense, requiring the latest graphics cards and high-bandwidth cables like HDMI 2.1 to transmit the massive amounts of data required. The choice between these resolutions often boils down to a balance of desired visual quality, budget, the size of the display, and the specific applications it will be used for.
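To get a feel for why the bandwidth demands grow so quickly, the uncompressed video data rate can be estimated as width x height x frames per second x bits per pixel. The sketch below assumes 60 frames per second and 24 bits per pixel (8 bits per color channel); both values are assumptions for illustration, and the function name is hypothetical.

```python
def raw_video_gbps(width_px: int, height_px: int,
                   fps: int = 60, bits_per_pixel: int = 24) -> float:
    """Uncompressed video data rate in gigabits per second (active pixels only)."""
    bits_per_second = width_px * height_px * fps * bits_per_pixel
    return bits_per_second / 1e9


for name, (w, h) in {"FHD": (1920, 1080), "4K": (3840, 2160), "8K": (7680, 4320)}.items():
    print(f"{name} at 60 fps: about {raw_video_gbps(w, h):.2f} Gbps uncompressed")
```

Under these assumptions the figures come out at roughly 3, 12, and 48 Gbps. Real links also carry blanking intervals and encoding overhead, and may use chroma subsampling or compression, so treat these numbers as a rough guide rather than a cable specification.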

Advantages and Disadvantages of Each Resolution

Each display resolution, from the tried-and-true FHD to the cutting-edge 8K, comes with its own set of strengths and weaknesses. These pros and cons are not absolute but rather contextual, depending heavily on the user’s needs, budget, and the specific technological ecosystem they operate within. Understanding these trade-offs is paramount when making an informed decision about your next display purchase. What might be an advantage for one user, such as affordability, could be a disadvantage for another, who prioritizes ultimate visual fidelity.

The rapid pace of technological advancement means that what was once a high-end feature quickly becomes mainstream. As we delve into the advantages and disadvantages of FHD, 4K, and 8K, we’ll consider factors like cost, hardware requirements, content availability, and the overall user experience. This detailed breakdown will illuminate why each resolution holds its particular place in the market and help you weigh the benefits against the drawbacks for your specific situation.

Advantages of FHD

Full HD (FHD), with its 1920 x 1080 pixel count, still holds a significant position in the display market due to several compelling advantages. Firstly, affordability is a major draw. FHD displays are significantly less expensive than their 4K or 8K counterparts, making them accessible to a wider consumer base for everything from budget laptops to entry-level televisions and monitors. This cost-effectiveness extends beyond the display itself, as the hardware required to drive FHD content (such as graphics cards) is also considerably less demanding and thus more affordable.

Secondly, FHD boasts near-universal compatibility. Almost all digital content, from web videos to mainstream video games and television broadcasts, is available in FHD. You won’t struggle to find content that looks good on an FHD screen, and you won’t encounter significant upscaling issues. For gamers, particularly those focused on competitive titles, FHD often allows for much higher frame rates and refresh rates (e.g., 144Hz, 240Hz, or even higher) even with mid-range GPUs. This smooth performance can be a critical advantage in fast-paced games where every millisecond counts. Finally, for many typical use cases on screens up to about 27 inches for monitors or 40-50 inches for TVs (depending on viewing distance), FHD provides a perfectly good visual quality that is sharp enough for comfortable viewing without visible pixels.

Disadvantages of FHD

Despite its widespread adoption and advantages, Full HD (FHD) displays do come with notable limitations, especially when compared to higher-resolution alternatives. The most significant disadvantage is the relatively lower pixel density on larger screens. While FHD looks perfectly sharp on a 13-inch laptop, stretching those same 2 million pixels across a 55-inch television can result in a noticeably softer image where individual pixels become visible at closer viewing distances. This lack of sharpness can detract from the immersive experience, particularly for movies and high-detail games.

Another drawback is the reduced screen real estate for productivity. With fewer pixels, you have less digital workspace. This means you might need to scroll more frequently or resize windows to fit everything on screen, which can hinder multitasking for professional users like graphic designers, video editors, or programmers who benefit from seeing more content at once. While FHD content is abundant, the visual experience of 4K HDR content is undeniably superior, offering greater detail, color depth, and contrast that FHD simply cannot replicate. As 4K becomes the new standard for premium content, FHD users might feel they are missing out on the best possible viewing experience. Lastly, from a future-proofing perspective, FHD is slowly being phased out as the premium standard. While it will remain relevant for many years, new flagship devices and cutting-edge content are increasingly designed with 4K (and even 8K) in mind, meaning FHD displays will eventually be considered entry-level.

Advantages of 4K

4K resolution, also known as Ultra HD (UHD), has rapidly become the sweet spot for many consumers, offering a compelling blend of stunning visual quality and growing accessibility. Its primary advantage is the dramatic increase in detail and sharpness. With four times the pixels of FHD, 4K displays render images with incredible clarity, making text crisper, graphics more lifelike, and fine details in photos and videos stand out. This enhanced fidelity creates a far more immersive experience for movies, TV shows, and video games.

For productivity, 4K monitors offer significantly more screen real estate. Professionals in fields like graphic design, video editing, architecture, and software development can comfortably open multiple applications side-by-side without feeling cramped, or view large documents and timelines with greater context. This boost in workspace can lead to increased efficiency. The content ecosystem for 4K is also robust and rapidly expanding. Major streaming services like Netflix, Disney+, Amazon Prime Video, and YouTube offer a vast library of 4K HDR content, providing a premium viewing experience. Many new video games are also optimized for 4K, allowing players with powerful hardware to enjoy unparalleled visual quality. Furthermore, 4K displays are becoming increasingly affordable, moving from luxury items to mainstream options, making the upgrade more attainable for a broader audience while still providing a significant visual improvement over FHD.

Disadvantages of 4K

While 4K resolution offers numerous benefits, it’s not without its drawbacks, which can be significant depending on your setup and expectations. One of the most prominent disadvantages is the increased demand on hardware. To run games or edit high-resolution video natively at 4K, you need a powerful and often expensive graphics card (GPU). Mid-range GPUs might struggle to achieve desirable frame rates at 4K, especially in demanding games, potentially forcing users to lower graphical settings or resolution to achieve smooth performance.

Another consideration is cost. While 4K displays are becoming more affordable, they are still generally more expensive than comparable FHD models. This cost extends to supporting peripherals, such as higher-bandwidth HDMI 2.0 or DisplayPort 1.2+ cables, and potentially faster internet connections for seamless 4K streaming. Content file sizes are also significantly larger for 4K, requiring more storage space and longer download times. For users with limited internet bandwidth, 4K streaming can consume data caps quickly or suffer from buffering issues. Lastly, while the 4K content ecosystem is growing, not everything is available in native 4K. Older content or certain niche productions might still be limited to FHD, meaning your 4K display will be upscaling the content, which, while often effective, doesn’t provide the same crispness as native 4K. Some operating systems and applications can also have scaling issues, leading to tiny text or UI elements that are difficult to read without adjusting scaling settings, which can sometimes introduce blurriness.
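The scaling trade-off mentioned above can be illustrated with a little arithmetic: when an operating system scales the interface, the usable workspace behaves like a lower-resolution screen drawn with more pixels per element. This is a simplified sketch; the scale percentages are common examples chosen for illustration, and real operating systems may round differently.

```python
def effective_workspace(width_px: int, height_px: int, scale_percent: int) -> tuple[int, int]:
    """Approximate workspace, in scaled units, at a given UI scale percentage."""
    return width_px * 100 // scale_percent, height_px * 100 // scale_percent


# A 3840 x 2160 panel at a few assumed scale settings.
for scale in (100, 150, 200):
    w, h = effective_workspace(3840, 2160, scale)
    print(f"{scale}% scaling: workspace comparable to {w} x {h}")
```

At 200% scaling a 4K panel offers the same workspace as FHD, just rendered more sharply, while 100% gives the full workspace at the cost of very small text on smaller screens.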

Advantages of 8K

8K resolution represents the pinnacle of current consumer display technology, offering a level of visual fidelity that truly stands apart. The most striking advantage is the unparalleled detail and realism. With over 33 million pixels, an 8K display renders images with such astonishing clarity that individual pixels become virtually indistinguishable to the human eye at typical viewing distances, even on very large screens. This creates an incredibly smooth, seamless, and “window-like” visual experience, making content appear almost three-dimensional in its depth and texture.

For large screen sizes (65 inches and above), 8K truly shines, providing a sense of immersion that lower resolutions cannot match. The increased pixel density on these vast canvases allows viewers to sit closer to the screen without perceiving any pixelation, pulling them deeper into the content. This is particularly impactful for applications like cinema, where the goal is to fully envelop the audience. From a future-proofing perspective, investing in an 8K display ensures you have the highest possible resolution for years to come, ready for when 8K content becomes more prevalent. Furthermore, even with lower-resolution content, the upscaling engines found in premium 8K TVs are highly advanced, using AI and machine learning to fill in missing pixels and enhance image quality to a degree that can sometimes surpass what a native 4K display achieves with the same source. For highly specialized professional fields such as scientific visualization, medical imaging, or high-end film post-production, 8K provides critical precision and the ability to view immense datasets or extremely detailed footage without compromise.

Disadvantages of 8K

Despite its breathtaking visual potential, 8K resolution currently faces significant hurdles and comes with several substantial disadvantages that limit its practicality for most consumers. The most prominent barrier is cost. 8K displays are extremely expensive, representing a premium investment far beyond 4K models. This high price point is often prohibitive for the average consumer, making it a luxury item for early adopters or niche professional markets.

Another major drawback is the severe lack of native 8K content. While 4K content is abundant, genuine 8K movies, TV shows, or video games are exceedingly rare. This means that an 8K display will spend most of its time upscaling 4K or even FHD content. While 8K TVs feature advanced upscaling technology, it’s still an approximation and cannot replicate the true crispness of native 8K. This diminishes the primary benefit of the higher resolution for everyday viewing. Furthermore, the hardware requirements for 8K are immense. Running games or editing video at native 8K demands the absolute top-tier graphics cards, which are themselves very expensive. The bandwidth required for 8K is also substantial, necessitating the latest HDMI 2.1 cables and compatible ports on both the display and the source device, which adds to the overall cost and complexity. 8K content files are also enormous, requiring vast amounts of storage and exceptionally fast internet connections for streaming or downloading. For most users, at typical viewing distances and screen sizes below 65 inches, the visual difference between 4K and 8K is often negligible or imperceptible, leading to diminishing returns on the significant investment.

Choosing the Right Resolution for Your Needs

Navigating the landscape of display resolutions can feel overwhelming, especially with the constant push for higher pixel counts. However, the “best” resolution isn’t a universal standard; it’s a highly personal choice that depends entirely on your specific needs, primary use cases, budget, and even your viewing environment. There’s no single answer that fits everyone, and what’s ideal for a hardcore gamer might be overkill for someone primarily watching casual TV.

Making an informed decision requires careful consideration of several key factors. You need to think about what you’ll primarily be using the display for, how much you’re willing to spend, the size of the screen you’re looking for, and how long you expect the display to remain relevant. This section will break down these considerations, offering practical advice tailored to different user profiles and helping you choose a resolution that provides the optimal balance of performance, visual quality, and value for your investment.

Considerations for Gaming

For gamers, choosing the right display resolution is a critical decision that balances visual fidelity with performance. The ideal choice often depends on the type of games you play and the power of your gaming PC or console.

For competitive esports gamers, Full HD (FHD) at 1920 x 1080 often remains the preferred choice. The reason is simple: frame rate and refresh rate are paramount. An FHD monitor can typically achieve much higher frame rates (e.g., 144 frames per second (FPS) or more) with a mid-range GPU, especially when paired with a high refresh rate monitor (144Hz, 240Hz, or 360Hz). This results in extremely fluid motion, reduced input lag, and a competitive edge where every millisecond counts. The lower pixel count means less strain on the GPU, allowing for maximum performance.
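The “every millisecond counts” point is easier to see when refresh rates are converted into the time available per frame, which is simply 1000 milliseconds divided by the refresh rate. A minimal sketch, with an illustrative function name:

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time between consecutive frames, in milliseconds, at a given refresh rate."""
    return 1000.0 / refresh_hz


# Refresh rates mentioned in the text, plus 60 Hz for comparison.
for hz in (60, 144, 240, 360):
    print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per frame")
```

The GPU has to finish each frame within that window to keep up with the display, which is why the lighter pixel load of FHD makes very high refresh rates more attainable.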

For immersive single-player experiences and graphically rich AAA titles, 4K resolution at 3840 x 2160 offers a truly breathtaking visual upgrade. The incredible detail and sharpness make game worlds come alive, enhancing environments, character models, and special effects. However, to maintain playable frame rates at 4K, you’ll need a very powerful, high-end graphics card (e.g., an NVIDIA RTX 4080/4090 or AMD RX 7900 XTX or newer). Even with top-tier hardware, achieving consistently high frame rates (e.g., 60 FPS or higher) in the most demanding titles can be challenging, often requiring the use of DLSS (Deep Learning Super Sampling) or FSR (FidelityFX Super Resolution) upscaling technologies. The visual trade-off is often worth it for those who prioritize stunning graphics over raw frame rate.

8K resolution for gaming is currently largely impractical. The hardware demands are immense, requiring multiple top-tier GPUs (which is rarely supported by modern games) or next-gen graphics cards that are still years away from mainstream adoption to render games natively at 7680 x 4320 with acceptable frame rates. While 8K TVs exist, the gaming experience on them is typically limited to 4K with upscaling, or significantly reduced graphical settings. For the foreseeable future, 4K represents the pinnacle of visually stunning gaming, while FHD remains king for competitive performance. Don’t forget to also consider monitor size, response time, and HDR capabilities, as these also play a significant role in the overall gaming experience.

Considerations for Streaming and Media Consumption

When it comes to streaming and general media consumption, the choice of display resolution heavily influences the quality of your entertainment experience, yet it’s not solely about the highest pixel count. Factors like content availability, internet bandwidth, and the capabilities of your streaming devices are equally important.

For most casual viewers, Full HD (FHD) at 1920 x 1080 still provides a perfectly enjoyable viewing experience. A vast majority of content on platforms like YouTube, Netflix, and traditional broadcast television is available in FHD. If you have a standard internet connection or are watching on a smaller screen (like a laptop or a TV up to 40-50 inches), FHD offers good clarity without demanding excessive bandwidth. It’s also the most budget-friendly option for TVs and monitors.

However, for those seeking a premium cinematic experience, 4K resolution at 3840 x 2160 is the current gold standard. The difference in detail and sharpness from FHD is immediately noticeable, especially on larger screens (55 inches and above). The 4K content ecosystem is incredibly robust, with major streaming services offering extensive libraries of movies and TV shows in 4K HDR. The combination of 4K’s increased pixel density and HDR’s enhanced contrast and color depth delivers a truly immersive and lifelike picture. To fully enjoy 4K streaming, you’ll need a stable and fast internet connection (typically 25 Mbps or higher) and a compatible 4K HDR television or monitor, along with a 4K-capable streaming device (e.g., Apple TV 4K, Roku Ultra, Fire TV Stick 4K Max).
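For viewers on capped data plans, it helps to translate a connection speed into data per hour. The sketch below treats the 25 Mbps figure as if the stream used the full rate continuously, which is an assumption that gives an upper bound; actual stream bitrates are typically lower than the recommended connection speed.

```python
def gigabytes_per_hour(megabits_per_second: float) -> float:
    """Data transferred in one hour at a constant bitrate, in decimal gigabytes."""
    megabits_per_hour = megabits_per_second * 3600
    return megabits_per_hour / 8 / 1000  # 8 bits per byte, 1000 MB per GB


print(f"25 Mbps sustained: up to {gigabytes_per_hour(25):.2f} GB per hour")  # 11.25 GB
```

The same function can be called with other bitrates to compare against a monthly data cap.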

8K resolution at 7680 x 4320 is currently overkill for most media consumption. Native 8K content is extremely scarce, limited to a few experimental productions or specialized demos. While 8K TVs feature advanced upscaling technology to make 4K or FHD content look better, the improvement over a high-quality native 4K display is often marginal at typical viewing distances. The substantial cost of 8K displays, coupled with the lack of content and the immense bandwidth requirements (often 50-100 Mbps for future 8K streaming), makes it a poor value proposition for general media consumption in 2025. For the best balance of quality, content availability, and cost-effectiveness, 4K is undoubtedly the top choice for streaming and media consumption.

Future-Proofing Your Display

Future-proofing a display involves making a purchasing decision that anticipates future technological advancements and content trends, ensuring your investment remains relevant and delivers a high-quality experience for years to come. In the context of display resolution, this means considering not just what’s available today, but what will become mainstream tomorrow.

Currently, 4K resolution stands as the most practical and effective choice for future-proofing. The 4K content ecosystem is mature and continues to expand rapidly across streaming services, gaming, and professional media. Most new content is produced with 4K in mind, and the underlying hardware to support 4K (like graphics cards and HDMI 2.0/2.1 ports) is widely available and becoming more affordable. Investing in a good 4K HDR display today ensures you can enjoy the vast majority of high-quality content released over the next 5-7 years without feeling technologically behind. Look for displays with HDMI 2.1 ports if you’re connecting to next-gen consoles or powerful PCs, as this standard supports higher refresh rates at 4K and is a prerequisite for 8K.

8K resolution, while offering the ultimate in pixel density, is still too far ahead of its time for most consumers to be considered a sensible future-proofing investment. The 8K content ecosystem is virtually non-existent, and the hardware required to drive it is prohibitively expensive and often underutilized. While 8K displays come with advanced upscaling capabilities, relying solely on upscaling for the majority of your content negates the primary benefit of native 8K. It’s a technology that will likely take many more years to mature and become mainstream, by which time current 8K displays may be outdated in other aspects (like panel technology or processing power).

For those on a tighter budget, FHD still offers a solid, if not cutting-edge, experience. However, it’s less “future-proof” than 4K as new content increasingly targets higher resolutions. The best strategy for future-proofing is to invest in a high-quality 4K HDR display with modern connectivity (like HDMI 2.1) that meets your current needs, rather than overspending on 8K for a future that hasn’t fully arrived yet. The money saved on an 8K display could be better allocated to a more powerful GPU or other components that enhance your overall computing or entertainment experience today.

Conclusion

The journey through the world of display resolutions reveals a fascinating evolution in how we perceive and interact with digital content. From the foundational clarity of Full HD to the breathtaking detail of 4K and the nascent, ultra-high fidelity of 8K, each technological leap has redefined our expectations for visual quality. Understanding these distinctions is not merely an academic exercise; it’s a practical necessity for anyone looking to make an informed decision about their next screen, whether it’s for work, play, or pure entertainment.

Ultimately, the “best” resolution isn’t a fixed standard but a dynamic choice tailored to individual priorities. For many, 4K has emerged as the undeniable sweet spot, delivering an exceptional balance of visual prowess, widespread content availability, and increasingly accessible pricing. It provides a tangible upgrade over FHD without the significant compromises associated with 8K’s early adoption phase. As technology continues its relentless march forward, we can anticipate further innovations, but the core principles of pixel density, hardware compatibility, and content ecosystems will remain central to the display experience.

Summary of Key Points

To recap, display resolution refers to the number of pixels on a screen, expressed as width x height. More pixels generally mean sharper images and greater detail.

Full HD (FHD), or 1920 x 1080, remains a highly accessible and compatible resolution, offering good visual quality for everyday tasks, competitive gaming (prioritizing frame rate), and budget-conscious users. Its advantages include affordability and low hardware demands, but it lacks the sharpness of higher resolutions on larger screens.

4K resolution (3840 x 2160), also known as Ultra HD (UHD), provides four times the pixels of FHD. It delivers stunning detail, increased screen real estate for productivity, and an immersive experience for gaming and media consumption. The 4K content ecosystem is robust and growing, making it the current sweet spot for most premium experiences. However, it demands more powerful hardware and can be more expensive.

8K resolution (7680 x 4320) is the cutting edge, boasting four times the pixels of 4K. It offers unparalleled detail and realism, particularly on very large screens, and is ideal for specialized professional applications. Its disadvantages currently outweigh its benefits for most consumers due to extremely high cost, severe lack of native content, and immense hardware requirements.

When choosing a display, consider your primary use case (gaming, streaming, productivity), your budget, the screen size, and your desired level of future-proofing. 4K currently offers the best balance for most users, while FHD remains a strong budget-friendly option.

The Future of Display Resolutions

The trajectory of display resolutions is undeniably upwards, driven by consumer demand for ever more immersive and realistic visual experiences. While 4K is firmly established as the current standard for premium viewing, the future promises continued innovation. We can expect 4K to become even more ubiquitous, eventually replacing FHD as the baseline for most devices. This will be accompanied by increasingly affordable 4K displays, making ultra-high-definition visuals accessible to everyone.

For 8K, its path to mainstream adoption will likely be slower and more deliberate. The challenges of content creation, distribution, and the sheer hardware power required are significant. However, advancements in AI-powered upscaling will continue to improve, making lower-resolution content look increasingly impressive on 8K screens. As 8K content slowly emerges, likely starting with specialized fields and potentially AR/VR applications, the technology will gradually mature and become more viable for discerning consumers, particularly those with very large displays.

Beyond just pixel counts, the future of displays will also focus on other crucial advancements. We’ll see further development in HDR (High Dynamic Range), delivering even greater contrast and vibrant colors. Higher refresh rates will become standard across more resolutions, benefiting gamers and providing smoother motion for all content. Panel technologies like OLED and Mini-LED will continue to evolve, offering superior black levels and brightness. Ultimately, the goal is to create displays that are not just technically advanced, but that seamlessly blend into our lives, offering visuals so lifelike they become indistinguishable from reality, paving the way for truly transformative digital experiences.

Frequently Asked Questions

What is the main difference between 1080p, 4K, and 8K?

The main difference lies in the pixel count. 1080p (FHD) has 1920×1080 pixels, 4K (UHD) has 3840×2160 pixels (four times FHD), and 8K has 7680×4320 pixels (four times 4K, sixteen times FHD). More pixels mean greater detail and sharpness.
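
The arithmetic behind those multiples is easy to check yourself. The short Python sketch below simply multiplies width by height for each resolution; the dictionary and function names are illustrative choices, not part of any standard.

```python
# Pixel dimensions for the three resolutions discussed in this article.
RESOLUTIONS = {
    "FHD": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def total_pixels(width, height):
    """Return the total number of pixels in a width x height grid."""
    return width * height


fhd_pixels = total_pixels(*RESOLUTIONS["FHD"])

for name, (w, h) in RESOLUTIONS.items():
    pixels = total_pixels(w, h)
    print(f"{name}: {w} x {h} = {pixels:,} pixels ({pixels // fhd_pixels}x FHD)")
```

Doubling both the width and the height quadruples the pixel count, which is why each step up is four times the previous one.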

Do I need an 8K TV in 2025?

For most consumers, an 8K TV is not necessary in 2025. Native 8K content is extremely limited, and the visual benefits over 4K are often negligible at typical viewing distances, especially on screens smaller than 65 inches.
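
One way to reason about viewing distance is to estimate how many pixels fall within one degree of your field of view. The sketch below is a rough illustration only: it assumes a 16:9 panel, a hypothetical 65-inch screen viewed from 8 feet (96 inches), and the common rule of thumb that around 60 pixels per degree corresponds to 20/20 vision. The function name and parameters are made up for this example.

```python
import math


def pixels_per_degree(width_px, diagonal_in, distance_in, aspect=(16, 9)):
    """Estimate pixels per degree of visual angle at the centre of the screen."""
    aspect_w, aspect_h = aspect
    # Physical screen width derived from the diagonal and aspect ratio.
    width_in = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    pixel_pitch_in = width_in / width_px
    # Visual angle covered by a single pixel, in degrees.
    degrees_per_pixel = math.degrees(2 * math.atan(pixel_pitch_in / (2 * distance_in)))
    return 1 / degrees_per_pixel


ACUITY_PPD = 60  # assumed rule-of-thumb threshold for 20/20 vision

for name, width_px in (("FHD", 1920), ("4K", 3840), ("8K", 7680)):
    ppd = pixels_per_degree(width_px, diagonal_in=65, distance_in=96)
    status = "above" if ppd >= ACUITY_PPD else "below"
    print(f"{name}: about {ppd:.0f} pixels per degree ({status} the assumed threshold)")
```

With these assumed numbers, 4K already sits well above the threshold, so the extra pixels of 8K are hard to perceive unless you sit closer or use a larger screen.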

Is 4K worth it for gaming?

Yes, 4K is worth it for gaming if you have a powerful graphics card capable of driving games at playable frame rates. It offers a significantly more immersive and visually stunning experience compared to FHD, bringing game worlds to life with incredible detail.

What internet speed do I need for 4K streaming?

For reliable 4K streaming, a stable internet connection of at least 25 Mbps (megabits per second) is a commonly recommended baseline, though exact figures vary by service. For 8K in the future, much higher speeds (50-100 Mbps) would be required.
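
If you have a data cap, it helps to translate those speeds into data usage. The sketch below converts a sustained bitrate into gigabytes per hour. It treats the recommended connection speed as if the stream actually used all of it, which is an upper-bound assumption; real streams typically use less. The function name is illustrative.

```python
def gigabytes_per_hour(mbps):
    """Convert a sustained bitrate in megabits per second to decimal gigabytes per hour."""
    megabits_per_hour = mbps * 3600        # 3600 seconds in an hour
    megabytes_per_hour = megabits_per_hour / 8  # 8 bits per byte
    return megabytes_per_hour / 1000       # 1000 MB per decimal GB


for rate in (25, 50, 100):
    print(f"{rate} Mbps sustained is about {gigabytes_per_hour(rate):.2f} GB per hour")
```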

Does screen size affect how I perceive resolution?

Absolutely. On a smaller screen, even FHD can look very sharp due to high pixel density. On larger screens, the benefits of 4K and 8K become more apparent as the increased pixel count helps maintain sharpness and prevent visible pixels at closer viewing distances.
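
Pixel density is usually quoted in pixels per inch (PPI), and you can work it out from the resolution and the diagonal screen size. The Python sketch below shows the calculation; the screen sizes are hypothetical examples chosen for comparison, and the function name is illustrative.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: the diagonal length in pixels divided by the diagonal in inches."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


# Hypothetical screens: (label, width, height, diagonal in inches).
EXAMPLES = [
    ("24-inch FHD monitor", 1920, 1080, 24),
    ("65-inch FHD TV", 1920, 1080, 65),
    ("65-inch 4K TV", 3840, 2160, 65),
    ("65-inch 8K TV", 7680, 4320, 65),
]

for label, w, h, diagonal in EXAMPLES:
    print(f"{label}: about {pixels_per_inch(w, h, diagonal):.0f} PPI")
```

The same FHD resolution is far denser on a 24-inch monitor than on a 65-inch TV, which is why larger screens benefit more from 4K and 8K.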

What is “upscaling” and how does it relate to resolution?

Upscaling is a process where a display’s processor takes lower-resolution content (e.g., FHD) and intelligently expands it to fit a higher-resolution screen (e.g., 4K or 8K). While it can significantly improve the appearance of non-native content, it never truly matches the crispness of native content rendered at the display’s full resolution.
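
To make the idea concrete, here is a deliberately simple sketch of upscaling in Python using nearest-neighbour repetition, where every source pixel is copied into a block of identical pixels. This is not how modern TVs do it (their processors use far more sophisticated, often AI-assisted, methods), and the function name is made up for illustration. It does show why upscaling cannot add real detail: FHD to 4K is exactly a 2x enlargement on each axis, so each source pixel simply becomes a 2 x 2 block.

```python
def upscale_nearest(image, factor):
    """Upscale a 2D grid of pixel values by an integer factor via pixel repetition."""
    upscaled = []
    for row in image:
        # Repeat each pixel horizontally.
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Repeat the widened row vertically.
        for _ in range(factor):
            upscaled.append(list(wide_row))
    return upscaled


tiny = [
    [1, 2],
    [3, 4],
]

for row in upscale_nearest(tiny, 2):
    print(row)
```

The output grid has four times as many pixels as the input, but it still contains only the four original values.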

Hi, I’m Nghia Vo: a computer hardware graduate, passionate PC hardware blogger, and entrepreneur with extensive hands-on experience building and upgrading computers for gaming, productivity, and business operations.

As the founder of Vonebuy.com, a verified ecommerce store under Vietnam’s Ministry of Industry and Trade, I combine my technical knowledge with real-world business applications to help users make confident decisions.

I specialize in no-nonsense guides on RAM overclocking, motherboard compatibility, SSD upgrades, and honest product reviews, sharing everything I’ve tested and implemented for my customers and readers.